Table of Content

The Call: 3 AM, and My Heart Sinks

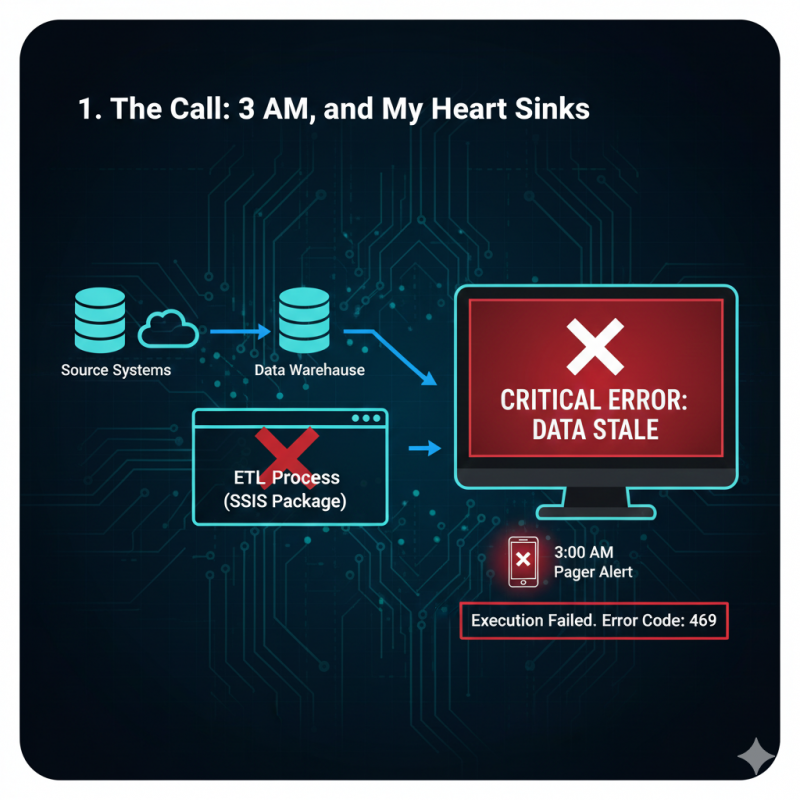

It was 3:07 AM. My phone vibrated with that distinct, gut-wrenching "critical alert" tone. My first thought? "No. Not again." I rolled over, blinking sleep from my eyes, and saw the message: "ETL Job Failed - Dashboard Data Stale." My heart sank. This wasn't just an ETL job; this was the ETL job, the one that fed the entire executive dashboard. And the last time it failed, the post-mortem meeting felt like a public interrogation.

I scrambled out of bed, fired up my laptop, and connected to the server. There it was, glowing ominously red in the SQL Server Agent logs: "Execution Failed. Error Code: 469." That damn number. It’s like a digital middle finger from the server—vague, unhelpful, and deeply, personally irritating. I knew I was in for a long night.

My First Suspect: The Sneaky Permission Monster

My first thought, always, when I see a 469, is permissions. I've been burned by this so many times. It goes like this: I, the brilliant data engineer, build the SSIS package in Visual Studio. It runs flawlessly because my user account has admin access to everything. But when it runs on the server, it's under a specific Service Account (or a SQL Agent Proxy account), and that account is often treated like a second-class citizen.

The Check: I immediately logged onto the server. I tried to connect to the source database as that Service Account. Nope. Connection denied. I tried to read the input CSV file from the shared network drive. Access Denied. "Aha!" I thought, a glimmer of hope.

The Solution (and the Frustration): I fired off an urgent email to the DBA (bless their hearts, they're probably used to my 3 AM pleas). "Hey, I need READ access on SourceDB.dbo.SalesData and READ/WRITE on DestinationDB.dbo.AggregatedReports for DOMAIN\MySSISServiceAccount." A few minutes later, the reply came: "Done." I re-ran the job. Still 469. My heart sank again. It wasn't just permissions this time.

My Second Suspect: The Diva of Data Flow (Metadata Mismatch)

Okay, if permissions weren't the sole issue, the next culprit in the 469 mystery is usually a metadata mismatch. This is where someone, somewhere, changed something in a source system, and my SSIS package, which expects things to be exactly as they were, throws a fit.

The Scenario: A new intern added a column to the sales table, or perhaps someone changed a VARCHAR(50) to a NVARCHAR(200) without telling anyone. My SSIS package's internal definition of that table is now out of date. When it tries to read the "old" column that no longer exists, or convert a data type it wasn't expecting, it just chokes. Instead of saying "Hey, column XYZ is gone!", it just gives me the vague, infuriating 469.

The Fix: I opened the SSIS package in Visual Studio (SSDT). I systematically went through every OLE DB Source and Destination component. For each one, I clicked "Columns" and watched if any errors popped up. Then, I right-clicked the component and selected "Refresh Metadata." After refreshing everything and saving the package, I redeployed it. I hit "Execute." Still 469. My coffee was getting cold. My exasperation was growing.

The Deep Dive: Unmasking the Real Villain with Verbose Logging

By this point, it was almost 4:30 AM. Permissions were good, metadata was refreshed. This meant the problem was deeper. This is where you pull out the big guns: Verbose Logging.

The Pain of Default Logs: The default SSIS logging is awful. It tells you a task failed, but rarely why. It’s like being told you missed your flight, but not which airline, airport, or destination.

Activating Verbose: I went into the SSIS Catalog (SSISDB) in SSMS, right-clicked the SSISDB node, and went to "Configure Project Logging." I set the logging level to "Verbose." This is like turning on all the lights in a dark room. It generates a ton of log data, but usually, somewhere in that wall of text, the real error message is hidden.

The Revelation: I re-ran the job for the fourth time. This time, after it failed (of course), I opened the execution report, clicked on the "Messages" tab, and started scrolling. Past all the successful task initiations, past all the usual noise, there it was: "The connection manager 'MySalesConnection' is being used by another data flow task simultaneously."

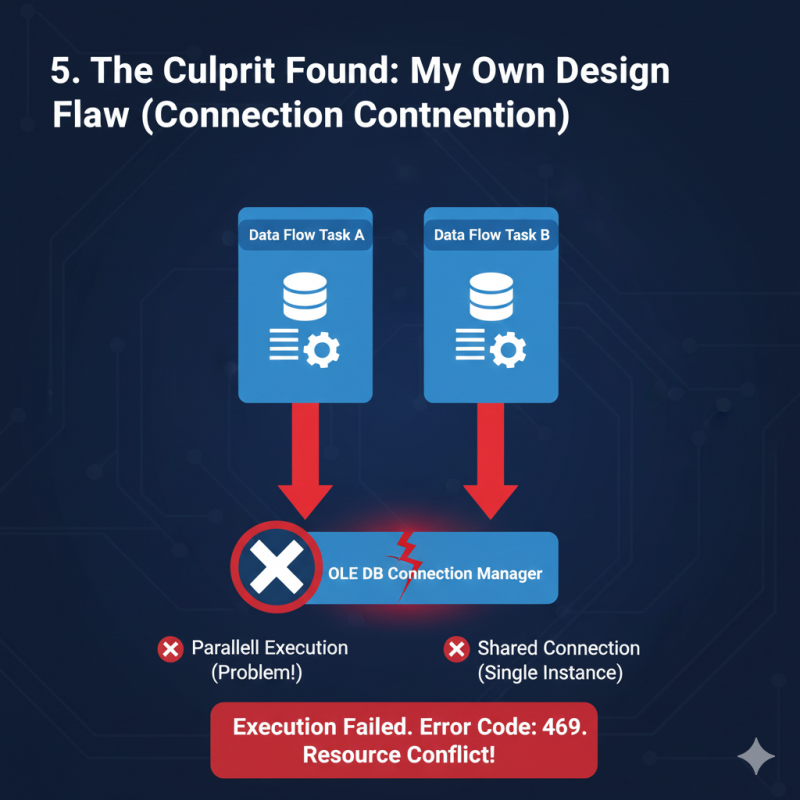

The Culprit Found: My Own Design Flaw (Connection Contention)

My facepalm could be heard across the empty office. I was guilty. I had designed two separate Data Flow Tasks to run in parallel (to speed things up, naturally), and both of them were trying to use the exact same OLE DB Connection Manager object at the same time to hit the same database.

The Scenario: Task A would grab MySalesConnection, start extracting data. Then, Task B, running concurrently, would try to grab MySalesConnection as well. The database couldn't handle two simultaneous, distinct operations through a single, shared connection object in that specific way, so it threw an error, and SSIS, in its infinite wisdom, translated it to a 469.

The Fix (Finally!): I opened the package one last time. For Task B, instead of just pointing it to the existing MySalesConnection from the project, I right-clicked the Connection Manager area and created a new instance of the connection, even if it pointed to the same server. This effectively gave each parallel task its own dedicated connection to use, preventing the fight for resources.

Victory (and a Cold Shower): The Sweet Taste of Green

I redeployed the package, took a deep breath, and hit "Execute." This time, I watched the execution report with bated breath. The tasks slowly turned green. The data flowed. The final success message popped up.

It was 5:15 AM. The dashboard was updated. The world was safe. I collapsed into my chair, feeling both exhausted and triumphant. This was the life of a data engineer: hours of frustration, followed by a fleeting moment of pure satisfaction when you finally squash that elusive bug. SSIS Error 469 wasn't just a technical problem; it was a personal challenge, and this time, I won. Now, for a very cold shower and a lot more coffee.

Architectural Habits to Banish SSIS 469

Habit #1: Externalize Credentials—Never Hard-Code Passwords

The most common reason for a 469 failure is the Service Account not being able to decrypt the passwords stored in the package. Why? Because I, the developer, saved them using my local Windows key. This is a classic rookie mistake.

The Problem I Solved: The default setting, EncryptSensitiveWithUserKey, is fantastic for development, but useless on the server. The server runs under a different user (the Service Account), which can't read my personal keys. The job fails, and I get 469.

The Fix: The SSIS Catalog is Your Best Friend. I now save all sensitive information—passwords, connection strings, server names—in the SSIS Catalog (SSISDB) Environment Variables. This centralizes management. When deploying, I simply map the package’s connection managers to those secure, server-side variables. The catalog handles the encryption, and the Service Account only needs access to the catalog, not my local key. This is the single biggest defense against permission-related 469 errors.

Habit #2: Go Modular—Embrace the Master-Child Workflow

Before my 469 disaster, I had one giant SSIS package that did everything: extracted data, transformed it, updated dimensions, and aggregated facts. If any single task failed, the entire thing blew up, and I got that nasty 469.

The Problem I Solved: Single points of failure. If the dimension update task failed due to a unique key violation, the fact loading task couldn't run, and the whole package died dramatically.

The Fix: The Master-Child Pattern. Now, I break my process into smaller, independent packages:

Master Package: This is a simple control flow that uses the Execute Package Task to call the children.

Child Packages: These contain the actual logic (e.g., UpdateDimensions.dtsx, LoadFacts.dtsx).

The Benefit: If UpdateDimensions.dtsx fails, the Master package logs the failure, sends an alert about that specific problem, and can often continue to the next task (or handle the failure gracefully). This drastically reduces the scope of failure and ensures that a single small issue doesn't paralyze the entire ETL process.

Habit #3: Design for Chaos—Integrate Resilience

I finally accepted that network traffic, server reboots, and transient database issues are just a fact of life. My pipelines needed to be built assuming chaos, not perfection.

The Problem I Solved: Intermittent 469 errors. These happen maybe once a week—a brief network hiccup, the server paused for maintenance—but they still break the job.

The Fix: Automated Retries and Dedicated Connections.

Retry Logic: I now use the For Loop Container around critical tasks (like a slow external API pull or a sensitive database connection). I configure the loop to iterate, say, three times, with a short delay. If the task fails on the first try (due to a transient 469), the loop automatically retries. If it fails after three tries, then it throws a hard error. This eliminates most random failures.

Dedicated Connections: To avoid the connection contention that gave me that 469 nightmare, I make sure every parallel task gets its own instance of a Connection Manager object, even if they point to the same database. This prevents resource fighting.

By implementing these three habits, externalizing credentials, modularizing the design, and building in retry resilience, I haven't seen a true, mysterious 469 error in months. The 3 AM calls are thankfully a thing of the past!

Post Comment

Be the first to post comment!