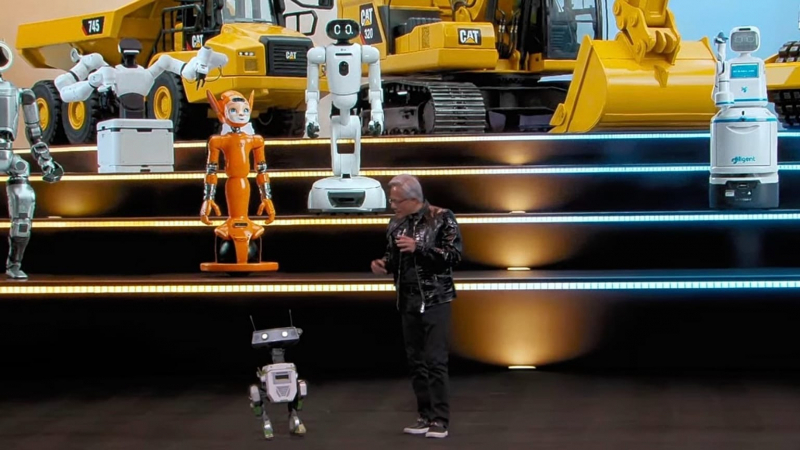

In a move that industry experts are calling the dawn of a new era for physical artificial intelligence, NVIDIA has officially pulled back the curtain on its Alpamayo platform at CES 2026. Described by CEO Jensen Huang as the "ChatGPT moment for physical AI," Alpamayo represents a radical shift from traditional self-driving software toward a system that can perceive, reason, and explain its actions with human-like logic. This family of open-source models and simulation tools is designed to conquer the "long tail" of rare and unpredictable road scenarios that has historically hindered the full-scale deployment of Level 4 autonomous driving.

At the heart of this announcement is Alpamayo 1, a vision-language-action (VLA) model with 10 billion parameters. Unlike previous generations of autonomous vehicle software that relied on rigid, rule-based programming, Alpamayo 1 uses a "chain-of-thought" reasoning process. This allows the vehicle to break down complex environments, such as a chaotic intersection during a power outage or an undocumented construction zone, into logical steps. Rather than just following a pre-set trajectory, the model can verbalize its decision-making logic, providing a level of transparency and safety that could help bridge the trust gap between machines and human passengers.

In a significant departure from proprietary industry norms, NVIDIA is releasing Alpamayo 1 as an open-source resource on the machine learning platform Hugging Face. This means developers, researchers, and global automakers can now access the model’s weights and fine-tune the technology for specific regional needs or safety requirements. To support this ecosystem, NVIDIA is also providing the AlpaSim simulation framework and a massive "Physical AI" open dataset. This collection includes over 1,700 hours of multi-sensor driving footage captured across 25 countries and 2,500 cities, offering the global community a standardized foundation to test and validate autonomous systems in high-fidelity virtual environments before they ever touch asphalt.

The real-world applications of this technology are arriving sooner than many anticipated. During the keynote, Huang confirmed that Mercedes-Benz will be the first to integrate Alpamayo-driven intelligence into its production line, starting with the new CLA model expected to hit U.S. roads later this year. The collaboration aims to deliver sophisticated point-to-point navigation that feels more intuitive and "human" to the driver. Other major players, including JLR, Lucid, and Uber, have already signaled their intent to leverage the Alpamayo stack to accelerate their own robotaxi and consumer vehicle roadmaps.

Beyond the immediate automotive benefits, Alpamayo represents a broader strategic pivot for NVIDIA into the realm of general-purpose robotics. By standardizing how machines reason about the physical world, NVIDIA is positioning itself as the foundational architect for a future where billions of autonomous devices, from delivery bots to humanoid assistants, can operate safely alongside humans. As the industry moves away from black-box algorithms and toward explainable, reasoning-based AI, Alpamayo stands as the most significant open-source contribution to mobility since the inception of the self-driving dream.
