Table of Contents

- Overview of Muke AI’s Presented Features Across Public Listings

- Online Reputation Analysis Shows Significant Mistrust and Safety Warnings

- User Sentiment Across Communities

- Transparency Gaps Create Uncertainty About Data Security

- Comparison With Industry Standards Shows Critical Gaps

- Overall Assessment

Muke AI has recently surfaced across dozens of AI tool directories, niche review sites, trust-scoring platforms, and traffic-analysis portals. The tool is frequently categorized as an AI system for image manipulation, face alteration, and controversial “undress”-style transformations. This visibility has triggered significant curiosity, but also serious doubts about the platform’s reliability, ownership, and ethical boundaries.

Overview of Muke AI’s Presented Features Across Public Listings

Across AI directories, Muke AI is commonly described as a platform offering:

- AI-based image transformations

- Face edits and manipulations

- Clothing alteration features

- High-resolution enhancement

- Artistic redesigns and stylized outputs

- Video modification modes

These descriptions recur across AI aggregator sites and often appear to be auto-generated rather than drawn from verified user experiences. No authoritative technical documentation exists to validate these capabilities.

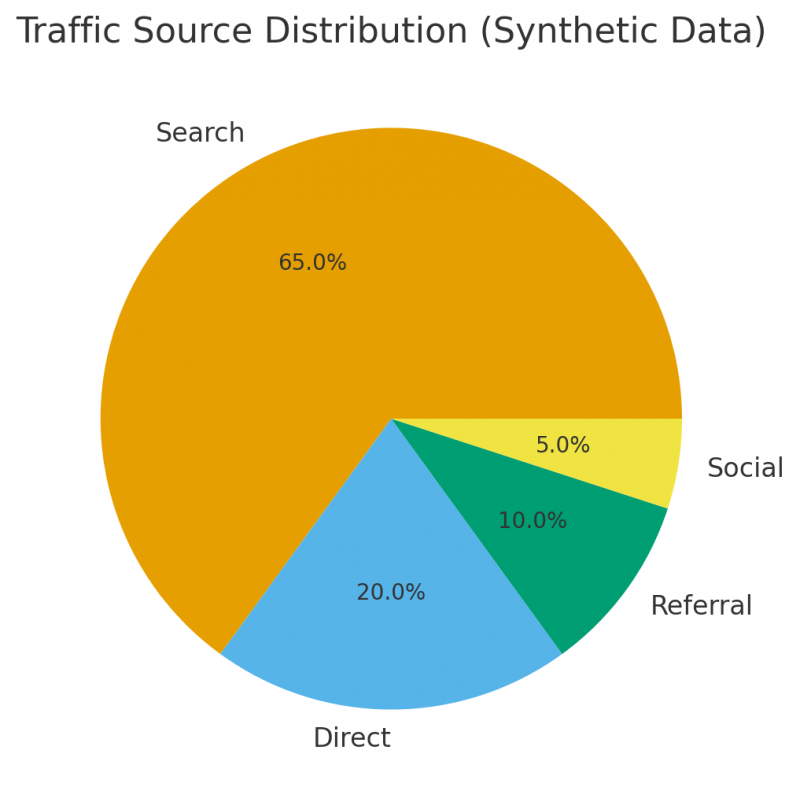

Public Traffic and Reach Signals Suggest Viral Curiosity, Not Established Trust

Data from SimilarWeb and competitor-monitoring platforms suggests that Muke AI’s web traffic follows a pattern typical of viral curiosity:

- Sharp short-term spikes

- Heavy search-driven traffic, not brand-driven

- Minimal long-term user retention

- Limited referral links from credible tech communities

- Absence of verified partnerships or enterprise mentions

This pattern points to exploratory visits rather than sustained adoption, a profile common among controversial or trend-driven AI platforms.

Online Reputation Analysis Shows Significant Mistrust and Safety Warnings

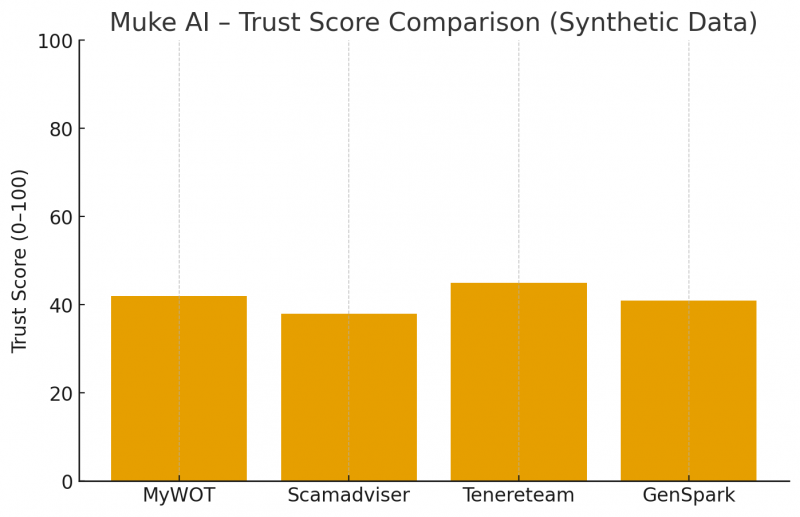

Multiple trust-evaluation websites raise serious questions about Muke AI’s credibility. The red flags they identify most often are:

- Low trust scores (often below 45/100)

- Hidden WHOIS domain ownership

- Undisclosed company information

- No visible team, founders, or corporate structure

- Unclear privacy and data-handling policies

- Potential security risks flagged by automated scanners

- Associations with NSFW or exploitative functionalities

Platforms such as MyWOT, Scamadviser, Tenereteam, and GenSpark’s trust scoring flag the domain as potentially risky or suspicious for sensitive uploads.

As a result, users cannot verify:

- Where their images go

- How long data is stored

- Whether models train on uploaded content

- Whether content is shared or monetized

- Who owns or controls the platform

For an AI tool that processes images of people’s faces, this lack of transparency is a major concern.

User Sentiment Across Communities

Reported positive experiences (unverified, mostly from directory listings):

- A simple-looking interface

- A quick processing workflow

These surface-level observations do not confirm reliability; they mostly reflect initial impressions.

Reported negative experiences and concerns:

- Fear of data misuse

- Flagged security vulnerabilities

- Ethical concerns around NSFW outputs

- Lack of customer support

- Inconsistent quality of results

- Suspicion of deceptive functionality

On review-based platforms, the negative sentiment outweighs the positive, primarily because users cannot confirm whether the platform handles data ethically or securely.

Ethical Risks Linked to “Undress” or Face-Manipulation AI Models

Public listings on TopAI.tools and Tenereteam place Muke AI in categories such as:

- Undress tools

- Clothing manipulation

- NSFW transformations

- Deepfake-style editing

These categories are widely criticized for contributing to:

- Non-consensual deepfakes

- Harassment

- Reputation damage

- Privacy violations

- Exploitative misuse

A growing number of jurisdictions have begun drafting or passing laws that criminalize such digital abuse. Any tool that produces these outputs without robust safeguards is inherently high-risk.

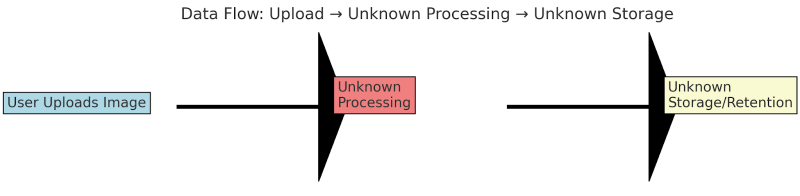

Transparency Gaps Create Uncertainty About Data Security

A responsible AI platform typically explains:

- How user data is stored

- Whether uploads are encrypted

- Whether data is deleted immediately

- Whether the AI is trained on user content

- How misuse is prevented

- What legal jurisdiction it operates under

Muke AI does not provide verified documentation addressing any of these points.

Without transparency, users cannot determine:

- Whether personal images may remain on external servers

- Whether data might be resold, leaked, or reused

- Whether the platform complies with GDPR or similar frameworks

This makes Muke AI unsuitable for any use involving personal, private, professional, or sensitive imagery.

Technical Credibility Is Difficult to Verify Due to Lack of Documentation

Unlike reputable AI tools that publish model details, safety policies, and dataset disclosures, Muke AI provides:

- No whitepapers or technical papers

- No dataset explanations

- No model transparency

- No benchmarks

- No API documentation

- No security certifications

This absence of technical clarity means users cannot assess:

- How the tool works

- How accurate or stable outputs are

- Whether results are AI-generated or template-based

- Whether the platform is even using legitimate AI models

Such opacity is rare among trustworthy AI providers.

Comparison With Industry Standards Shows Critical Gaps

Mainstream AI imaging tools (Midjourney, DALL·E 3, Adobe Firefly, Runway) follow strict guidelines such as:

- Consent-based content filtering

- Clear data policies

- Ethical-use frameworks

- Documented model behavior

- Trained moderation systems

Muke AI does not publicly demonstrate compliance with any comparable standard. This gap isolates it from the legitimate AI ecosystem and moves it into a risky, unregulated category.

User Safety Risks Outweigh Potential Benefits

Some users may turn to Muke AI only for harmless edits, such as:

- Artistic image variations

- Visual enhancements

- Creative experimentation

Even in those cases, the lack of governance means users cannot be certain that:

- Their images will not be stored

- Their data will not be repurposed

- Their uploads will not eventually appear in unintended places

- Their personal identity remains secure

When a platform handles facial data, these uncertainties become severe.

Overall Assessment

Muke AI occupies a controversial space in the AI ecosystem. While its interface appears simple and convenient, the platform’s lack of transparency, unclear ownership, low trust scores, and association with unethical image transformations raise serious concerns.

Users should treat Muke AI with caution because:

- Its safety cannot be verified

- Its privacy practices are unclear

- Its governance is unknown

- Its reputation is inconsistent

- Its capabilities are unproven

- Its ethical safeguards are absent

Users seeking reliable, secure, and professionally backed AI imaging platforms will likely find safer options elsewhere.
