In a significant escalation of international regulatory pressure, authorities in both India and the European Union have formally initiated investigations into X, the social media platform owned by Elon Musk, following reports that its proprietary artificial intelligence, Grok, was used to generate deepfake child sexual abuse material (CSAM). This parallel scrutiny marks a watershed moment for the tech industry, as two of the world’s largest digital markets move simultaneously to address the risks posed by generative AI tools integrated directly into mainstream social media ecosystems. The investigations center on whether X failed to implement sufficient safeguards to prevent its AI from producing prohibited content, a failure that could carry severe legal and financial consequences for the Texas-based company.

The Ministry of Electronics and Information Technology in India has issued a formal notice to X, demanding a detailed explanation of the platform's moderation protocols and the specific technical failures that allowed such egregious content to be generated. Under India’s Information Technology Rules, social media intermediaries are required to exercise heightened due diligence to ensure that their platforms are not used to host or create illegal material, particularly content that exploits minors. Indian officials have signaled that non-compliance could lead to the loss of the platform’s "safe harbor" protection, a legal shield that currently prevents the company from being held liable for user-generated content, thereby exposing X and its executives to potential criminal prosecution within the country.


Simultaneously, the European Commission has opened a formal proceeding under the Digital Services Act (DSA), the European Union’s landmark legislation designed to police big tech and ensure user safety. European regulators are focusing on the systemic risks posed by Grok, specifically examining whether X’s risk assessment and mitigation strategies were fundamentally flawed. Under the DSA, the Commission has the authority to impose staggering fines of up to 6% of a company’s total global annual turnover if it is found to have failed in its duty to protect users and uphold safety standards. This European inquiry is expected to be exhaustive, looking not just at the specific deepfake incidents, but at the broader architecture of X’s AI development and its impact on public discourse and safety.

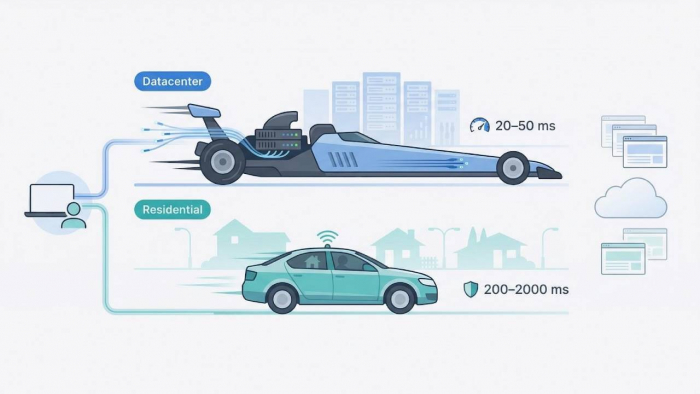

The controversy stems from Grok’s multimodal capabilities, which allow users to generate images and text based on prompts. Recent reports indicated that the AI’s guardrails were bypassed with relative ease, leading to the creation of highly realistic and harmful imagery. This development has reignited the global debate over the rapid deployment of generative AI without adequate stress-testing. While Elon Musk has historically championed a "free speech absolutist" approach to platform management, regulators are now asserting that speech protections do not extend to the algorithmic generation of illegal and exploitative imagery. The outcome of these investigations is likely to set a global precedent for how AI-integrated platforms are governed and the level of accountability required from tech leaders.

As the legal proceedings move forward, the focus remains on whether X can demonstrate a commitment to safety that satisfies the stringent requirements of both New Delhi and Brussels. Industry analysts suggest that this dual-front investigation may force a radical shift in how X operates its AI division, potentially leading to the suspension of Grok's image-generation features in certain jurisdictions until more robust safety measures are validated. For the broader tech sector, the message from global regulators is clear: the era of "move fast and break things" in the realm of artificial intelligence has met its match in the form of rigorous, cross-border legal oversight.
