Automated phone calling in 2026 looks very different from the robocalls people learned to distrust. The technology has shifted away from blunt volume and toward context-aware conversations, tighter compliance controls, and systems that decide when not to speak at all.

Most modern platforms now sit between traditional dialers and full conversational agents. They combine speech recognition, intent detection, and AI-generated voice to handle calls that are repetitive, time-sensitive, or operationally expensive for human teams. Used carefully, they remove friction. Used poorly, they amplify it.

This article breaks down how automated phone calling actually works today, the features that determine whether it succeeds or fails, and the tools teams compare when deciding how much automation to introduce and where to draw the line.

What people usually mean when they say “automated phone calling”

In 2026, automated phone calling usually refers to systems that can:

● Place or receive calls without a live agent

● Listen to spoken input using speech-to-text

● Decide what to do next using natural language understanding

● Respond with text-to-speech voices that sound conversational

● Log outcomes into CRMs or internal systems

Some setups still rely on humans for escalation. Others are fully autonomous for narrow tasks like reminders or lead qualification. The difference matters, because it changes cost, compliance exposure, and failure modes.

Features that define automated phone calling in 2026

Automated phone calling systems in 2026 are no longer defined by dialing alone. What separates usable systems from noisy ones is a set of operational features that control pace, context, and risk.

Conversational intent handling is now foundational. Modern systems do not wait for exact phrases. They classify intent even when callers interrupt, hesitate, or change direction mid-sentence. This is what allows calls to feel adaptive instead of scripted.

Low-latency response handling matters more than voice quality. Systems that respond in under one second maintain conversational flow. Anything slower tends to feel artificial to the caller and invites hang-ups.

Predictive, progressive, and agentless dialing modes coexist. Teams choose between them based on risk tolerance. Predictive dialing optimizes volume. Progressive dialing trades speed for control. Agentless calling removes humans entirely for narrow tasks like reminders or confirmations.
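
As a rough illustration of how the three modes differ in pacing, the sketch below computes how many calls to launch in one dialing cycle. The function name, the `answer_rate` estimate, the `max_overdial` cap, and `agentless_slots` are all assumptions made for this example, not any vendor's algorithm. Real predictive dialers also model talk time and abandonment limits.

```python
import math

def calls_to_place(mode: str, idle_agents: int, answer_rate: float = 0.25,
                   max_overdial: float = 3.0, agentless_slots: int = 0) -> int:
    """Return how many calls to launch this cycle (illustrative pacing only)."""
    if mode == "progressive":
        # One call per idle agent: slower, but an agent is always free to answer.
        return idle_agents
    if mode == "predictive":
        # Over-dial to compensate for unanswered calls, capped to limit abandons.
        if idle_agents == 0 or answer_rate <= 0:
            return 0
        ratio = min(1.0 / answer_rate, max_overdial)
        return math.ceil(idle_agents * ratio)
    if mode == "agentless":
        # No humans involved: bounded only by the configured concurrency.
        return agentless_slots
    raise ValueError(f"unknown mode: {mode}")
```

With four idle agents, progressive mode places four calls, while predictive mode with a 50% answer rate places eight. The cap is where risk tolerance enters the calculation.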

Real-time monitoring and AI call summaries replace manual QA. Supervisors can see intent paths, interruption points, and drop-offs without listening to full recordings. This shortens iteration cycles dramatically.

Compliance enforcement at the system level is mandatory. DNC scrubbing, consent verification, STIR/SHAKEN authentication, and one-tap opt-outs are now built into call logic rather than added later.
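
One way to picture "built into call logic" is a gate that every number must pass before the dialer is allowed to touch it. The sketch below is a minimal, hypothetical version: the `Contact` fields, the `may_dial` name, and the reason strings are assumptions for illustration. STIR/SHAKEN signing happens at the carrier layer and is not shown.

```python
from dataclasses import dataclass

@dataclass
class Contact:
    phone: str
    has_consent: bool
    opted_out: bool = False

def may_dial(contact: Contact, dnc_numbers: set[str]) -> tuple[bool, str]:
    """Return (allowed, reason). Every check must pass before a call is placed."""
    if contact.opted_out:
        return False, "opted_out"
    if contact.phone in dnc_numbers:
        return False, "on_dnc_list"
    if not contact.has_consent:
        return False, "no_consent_on_record"
    return True, "ok"
```

Returning a reason alongside the decision keeps blocked calls auditable, which matters when a campaign's skip rate needs to be explained later.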

Post-call data extraction and syncing quietly delivers most ROI. Names, confirmations, objections, sentiment flags, and opt-outs are logged automatically into CRMs and analytics tools without human cleanup.

These features are less visible than voice demos, but they determine whether automation scales without backlash.

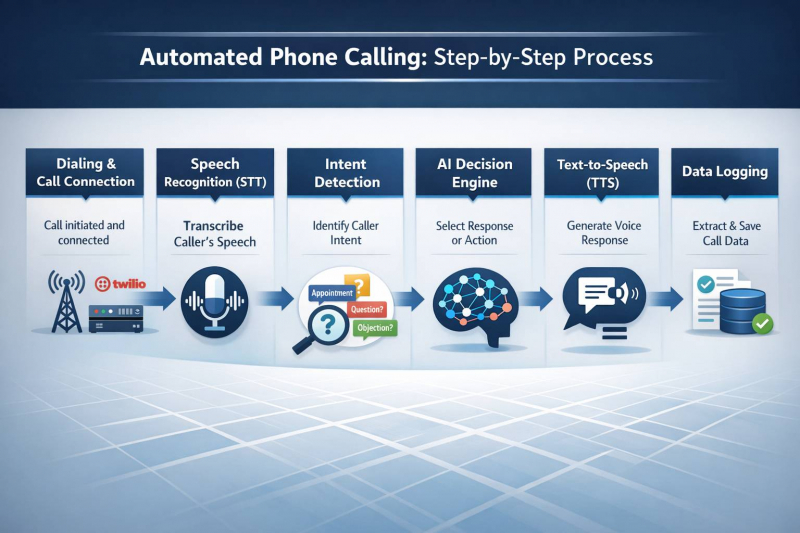

The mechanics, step by step, without the gloss

Most modern systems follow a similar internal flow, regardless of vendor.

Step 1: Dialing and call connection

The system initiates a call through a carrier layer, often using providers like Twilio or similar infrastructure. Caller ID authentication is applied at this stage to reduce spam filtering.

Step 2: Speech recognition (STT)

Once the call connects, the caller’s audio is streamed into a speech-to-text engine. This transcription happens continuously, not after the speaker finishes.
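
Continuous transcription usually means the engine emits partial hypotheses that get revised until a segment is finalized. The sketch below assumes a hypothetical event shape of `(text, is_final)` rather than any specific engine's API, and shows how a running transcript might be maintained.

```python
from typing import Iterable, Iterator

def running_transcript(events: Iterable[tuple[str, bool]]) -> Iterator[str]:
    """Yield the best transcript so far after every event."""
    finalized: list[str] = []
    for text, is_final in events:
        if is_final:
            finalized.append(text)
            yield " ".join(finalized)
        else:
            # A partial replaces the previous partial; it is never appended.
            yield " ".join(finalized + [text])
```

Downstream steps can act on partial text to save time, but should only commit decisions once a segment is final.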

Step 3: Intent detection

The transcribed text is analyzed to determine intent. The system classifies whether the caller is confirming, questioning, objecting, or requesting something unexpected.

Step 4: AI decision engine

Based on detected intent and prior context, the system selects the next action. This may be a scripted response, a clarification question, or a triggered workflow such as updating a CRM or scheduling a callback.
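
A stripped-down version of this step might look like the following. The `Intent` values mirror the four categories named in Step 3. The response wording, the workflow names, and the `objections` counter in the context are hypothetical, chosen only to show how intent and prior context combine into one action.

```python
from enum import Enum

class Intent(Enum):
    CONFIRM = "confirm"
    QUESTION = "question"
    OBJECT = "object"
    UNEXPECTED = "unexpected"

def next_action(intent: Intent, context: dict) -> dict:
    """Map detected intent plus call context to the next step."""
    if intent is Intent.CONFIRM:
        # Scripted response plus a triggered workflow.
        return {"say": "Great, you're confirmed.", "workflow": "update_crm"}
    if intent is Intent.QUESTION:
        return {"say": "Sure, what would you like to know?", "workflow": None}
    if intent is Intent.OBJECT:
        # Prior context matters: a repeated objection goes to a human.
        if context.get("objections", 0) >= 1:
            return {"say": "I'll connect you with a team member.",
                    "workflow": "escalate"}
        return {"say": "No problem. Would another time work better?",
                "workflow": None}
    # Anything unexpected gets a clarification question, not a guess.
    return {"say": "Sorry, could you say that again?", "workflow": None}
```

Production systems typically replace the `if` chain with a policy or a language model, but the contract stays the same: intent and context in, one action out.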

Step 5: Text-to-speech response (TTS)

The selected response is converted into voice and delivered back to the caller. Systems that keep this loop under one second preserve conversational rhythm.
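
Because the one-second target applies to the whole loop, it helps to track each stage's share of the budget. The sketch below is illustrative only: the stage names and the timings in the usage note are made up, not measurements from any platform.

```python
BUDGET_MS = 1000  # the one-second target for a full turn

def check_turn_latency(stage_ms: dict[str, float],
                       budget_ms: float = BUDGET_MS) -> dict:
    """Sum per-stage timings for one turn and report the slowest stage."""
    if not stage_ms:
        raise ValueError("no stage timings provided")
    total = sum(stage_ms.values())
    slowest = max(stage_ms, key=stage_ms.get)
    return {
        "total_ms": total,
        "within_budget": total < budget_ms,
        "slowest_stage": slowest,
    }
```

For example, `check_turn_latency({"stt": 250, "intent": 80, "decision": 120, "tts": 400})` reports a total of 850 ms, within budget, with `tts` as the stage to optimize first.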

Step 6: Data logging and extraction

After the call ends, structured data is extracted. Confirmations, opt-outs, sentiment signals, and key entities are logged automatically. This is where automation replaces hours of manual tagging.
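
As a minimal sketch of that extraction, the function below folds a list of labeled turns into one flat record ready to sync. The `CallRecord` fields and the intent labels (`confirm`, `opt_out`, `object`) are assumptions for this example; real systems extract many more fields, including sentiment and named entities.

```python
from dataclasses import dataclass, field, asdict

@dataclass
class CallRecord:
    call_id: str
    confirmed: bool = False
    opted_out: bool = False
    objections: list[str] = field(default_factory=list)

def summarize_call(call_id: str, turns: list[tuple[str, str]]) -> dict:
    """Fold (intent, utterance) turns into one CRM-ready record."""
    record = CallRecord(call_id=call_id)
    for intent, utterance in turns:
        if intent == "confirm":
            record.confirmed = True
        elif intent == "opt_out":
            record.opted_out = True
        elif intent == "object":
            # Keep the caller's own words so objections can be reviewed later.
            record.objections.append(utterance)
    return asdict(record)
```

The output is a plain dictionary, so it can be posted to whatever CRM or analytics endpoint a team already uses.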

This flow is largely consistent across vendors. What differs is how reliably each step performs under real-world conditions.

The tools people compare in 2026, and how they differ in practice

Below are five tools that show up consistently in discussions about automated phone calling. They are grouped here not as recommendations, but as reference points.

1. Lindy

Lindy is designed around conversation quality first, not dialing efficiency. Its core strength is maintaining natural back-and-forth exchanges even when callers interrupt, hesitate, or deviate slightly from the expected path.

The visual builder lowers setup friction for non-technical teams, which is why Lindy is often adopted by operations or support teams rather than engineering-led orgs. Multi-language support is not just a translation layer. Intents are detected per language, which reduces misclassification in international campaigns.

Where Lindy becomes less attractive is scale economics. As call volume increases, per-minute costs tied to higher-end voice models add up quickly. Teams using Lindy tend to reserve it for high-context calls, such as appointment coordination, qualification, or follow-ups where tone and accuracy matter more than throughput.

Lindy fits best when calls are fewer, longer, and expected to feel human.

2. Synthflow

Synthflow sits between scripted IVR systems and fully custom voice stacks. Its no-code builder allows teams to define intent flows, data capture points, and fallback logic without writing code.

One of its defining characteristics is real-time structured data extraction. During calls, Synthflow can capture answers and immediately pass them into downstream systems, which makes it well suited for lead intake, surveys, and qualification workflows.

The dependency on external telephony providers introduces both flexibility and friction. Teams can choose their carrier and voice engines, but troubleshooting often spans multiple systems. For teams comfortable managing that complexity, Synthflow offers control without committing to a single vendor stack.

Synthflow works well when teams want logic control but do not want to build infrastructure from scratch.

3. Retell AI

Retell AI is optimized around latency and reliability. In practice, this shows up most clearly when callers interrupt or speak quickly. Sub-second response times reduce awkward overlaps and improve perceived intelligence.

Its API-first design appeals to engineering teams that want to embed calling directly into existing systems. Retell is often used where calls trigger or depend on internal logic, such as checking records, validating information, or escalating based on content.

Compliance support, including HIPAA readiness, makes it viable in regulated industries. The tradeoff is accessibility. Without technical resources, teams may struggle to deploy or iterate quickly.

Retell is chosen when control and performance outweigh ease of setup.

4. Vapi

Vapi offers one of the most configurable environments in this category. Teams can select their own speech recognition engines, language models, and voice providers, then stitch them together into a unified calling system.

This modularity allows deep tuning. Industries with specific vocabulary, compliance flows, or call logic benefit from the ability to control each layer independently. Vapi does not force opinionated defaults, which experienced teams value.

That freedom comes with responsibility. Poor model selection or misconfigured latency budgets can degrade call quality quickly. Vapi requires careful testing, monitoring, and iteration to perform well.

Vapi is best suited for teams that already understand voice AI tradeoffs and want ownership over every decision.

5. Talkdesk

Talkdesk approaches automation from a contact center legacy perspective. Rather than replacing agents, it augments existing operations with AI-driven routing, dialing, and analytics.

Predictive dialing and workforce tools remain central, with AI layered on top for summaries, intent tagging, and partial automation. This makes Talkdesk a natural fit for enterprises that already operate call centers and want incremental automation without re-architecting workflows.

The platform carries more overhead than AI-first tools. Configuration, licensing, and operational cost can be prohibitive for small teams or experimental deployments.

Talkdesk works when automation needs to coexist with large human teams and established processes.

Regulation is not a footnote anymore

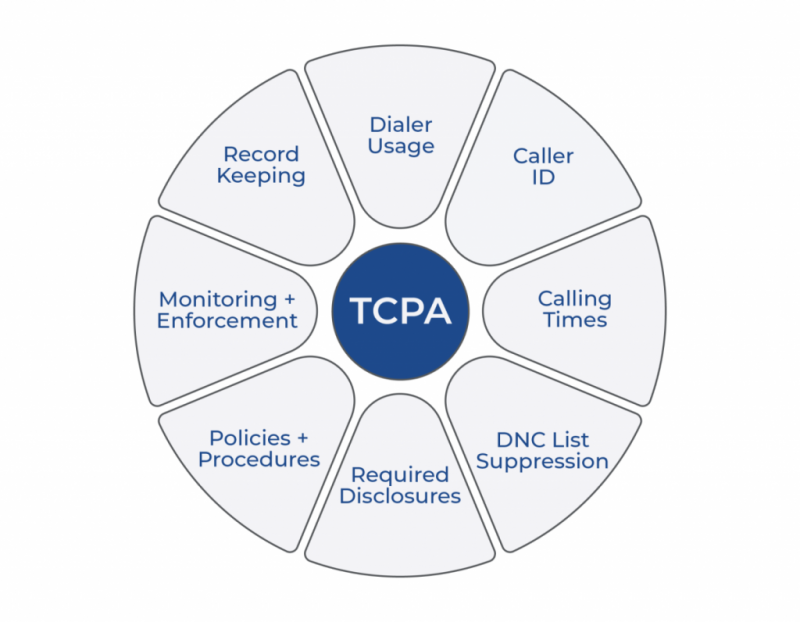

Automated phone calling sits under increasing scrutiny. In the United States, TCPA rules restrict unsolicited calls, especially those using artificial or prerecorded voices. Consent tracking is not just a checkbox. It needs to be logged, retrievable, and defensible.
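
To make "logged, retrievable, and defensible" concrete, here is a minimal sketch of an append-only consent log. The class names, fields, and `source` labels are hypothetical, and an in-memory list stands in for durable storage. It illustrates the shape of the record, not a legally sufficient implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class ConsentEvent:
    phone: str
    granted: bool          # False records a revocation
    source: str            # e.g. "web_form" (hypothetical label)
    recorded_at: datetime

class ConsentLog:
    """Append-only log: events are added, never edited or deleted."""

    def __init__(self) -> None:
        self._events: list[ConsentEvent] = []

    def record(self, phone: str, granted: bool, source: str) -> ConsentEvent:
        event = ConsentEvent(phone, granted, source, datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def history(self, phone: str) -> list[ConsentEvent]:
        """Full trail for one number, oldest first."""
        return [e for e in self._events if e.phone == phone]

    def has_consent(self, phone: str) -> bool:
        """Current status is whatever the most recent event says."""
        events = self.history(phone)
        return bool(events) and events[-1].granted
```

Keeping revocations as new events rather than overwriting the original grant is what makes the trail defensible: the log can show both that consent existed and exactly when it ended.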

STIR/SHAKEN has changed how caller ID trust works, and failure to authenticate calls now directly impacts answer rates. Similar frameworks exist globally, from India’s TRAI requirements to GDPR consent rules in the EU. Systems that do not treat compliance as a first-class feature tend to create risk rather than savings.

Where this is going, and where it still breaks

By 2026, a meaningful portion of routine service calls are fully automated. Conversational agents outperform static IVR trees, and integration with chat and email systems is becoming standard. Agentic AI is starting to handle multi-step tasks like rescheduling or follow-ups without human review.

At the same time, scam fatigue has made people more suspicious of unknown callers. Blocking technologies continue to evolve, and any automated system that behaves aggressively is filtered quickly. The future is less about volume and more about precision, timing, and context.

Closing perspective

Automated phone calling in 2026 is best understood as infrastructure, not a growth hack. When it works, it fades into the background. Calls happen at the right moment, say what needs to be said, and end without friction. When it fails, it feels intrusive fast.

The teams getting value are not chasing volume. They are designing narrow call paths, auditing outcomes, and treating consent as a system requirement, not a legal afterthought. The technology is mature enough now that the difference is no longer model quality or voice realism. It is judgment. Knowing which calls should exist at all, and which ones should never be automated in the first place.

In that sense, automated phone calling has stopped being about replacing humans. It has become about protecting human attention, on both sides of the line.
