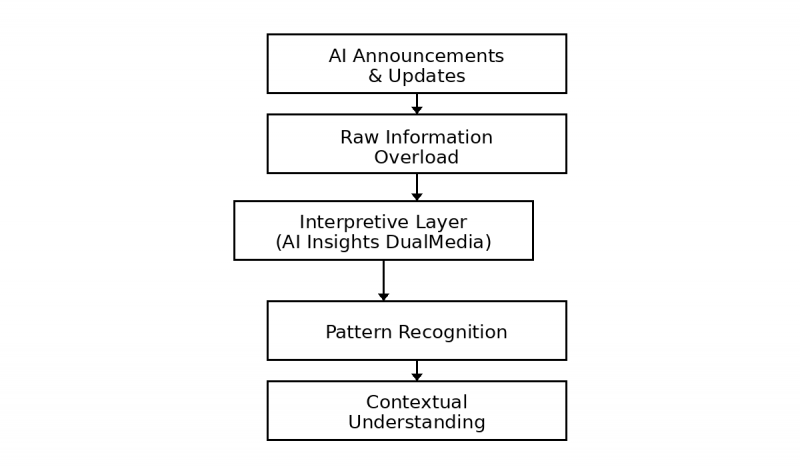

In 2025, the problem with AI is no longer access to information. Anyone can open a browser and find endless updates about models, tools, benchmarks, and “breakthroughs.” The harder part is figuring out what any of it actually means in practice.

A lot of AI content assumes momentum equals progress. New releases are treated as automatic improvements. But many readers have noticed a disconnect: AI capabilities are advancing quickly, while clarity around outcomes, limits, and trade-offs is lagging behind.

People aren’t confused because AI is complex. They’re confused because most content skips the middle step: interpretation. It jumps from announcement straight to optimism, without stopping to ask whether the change actually alters how work gets done.

AI Insights DualMedia as an Interpretive Layer

AI Insights DualMedia sits in a category of content that tries to slow things down rather than speed them up. Instead of adding to the stream of updates, it tends to focus on explanation, sometimes revisiting ideas that have already circulated elsewhere, but reframing them in a more grounded way.

The articles often take a step back and examine patterns: repeated adoption problems, recurring organizational friction, or mismatches between expectations and results. This makes the content feel less reactive and more reflective.

That approach won’t appeal to readers looking for immediate takeaways or tactical shortcuts. It’s more useful for people trying to understand why similar AI stories keep repeating across different industries.

Editorial Lens Behind AI Insights DualMedia

The tone across most pieces is restrained, sometimes even cautious. There’s little urgency, and very little certainty. That’s noticeable in a space where confidence is often exaggerated.

Instead of presenting AI developments as inevitable wins, the writing tends to highlight constraints: limited data quality, unclear accountability, workflow disruption, or human resistance to change. These aren’t edge cases; they’re common outcomes that rarely get attention in more promotional AI writing.

This editorial lens doesn’t make the content exciting, but it does make it easier to trust. It reads less like persuasion and more like observation.

Recurring Subject Areas Within AI Insights DualMedia

Certain topics show up repeatedly, not because they’re trending, but because they haven’t been resolved:

- AI systems that technically work but fail organizationally

- Automation that shifts labor instead of reducing it

- Decision systems that increase review layers rather than clarity

- Ethical concerns that surface only after deployment

- The gap between pilot success and long-term adoption

These aren’t speculative issues. They reflect problems many teams encounter after the initial enthusiasm wears off.

Depth Without Technical Overload

The content avoids deep technical explanations, but not in a way that feels evasive. It doesn’t oversimplify AI into metaphors, nor does it pretend every reader should understand model internals.

Instead, the focus is on effects. What changes after AI is introduced? Who has to adjust their work? Where do bottlenecks appear?

This makes the writing accessible to people involved in AI decisions without turning it into beginner-level material. It assumes readers are intelligent, but not necessarily technical specialists.

Reader Profiles That Gain the Most Value

This type of content tends to resonate with people who are adjacent to AI rather than embedded in it:

- Managers responsible for outcomes, not implementations

- Professionals asked to “use AI” without clear guidance

- Leaders deciding whether to expand or pause AI initiatives

- Students trying to understand AI beyond surface definitions

- Readers skeptical of overly optimistic AI narratives

It’s less useful for developers looking for solutions, and more useful for decision-makers trying to avoid mistakes.

Topics That Consistently Drive Reader Engagement

The articles that attract attention usually address issues people experience but rarely see articulated:

- Why AI tools often increase review and correction work

- Why more data doesn’t always improve decisions

- Why teams lose trust in AI outputs over time

- Why automation sometimes slows processes down

- Why AI success stories are hard to replicate

These topics don’t offer neat resolutions, but they reflect reality, and that’s often enough to keep readers engaged.

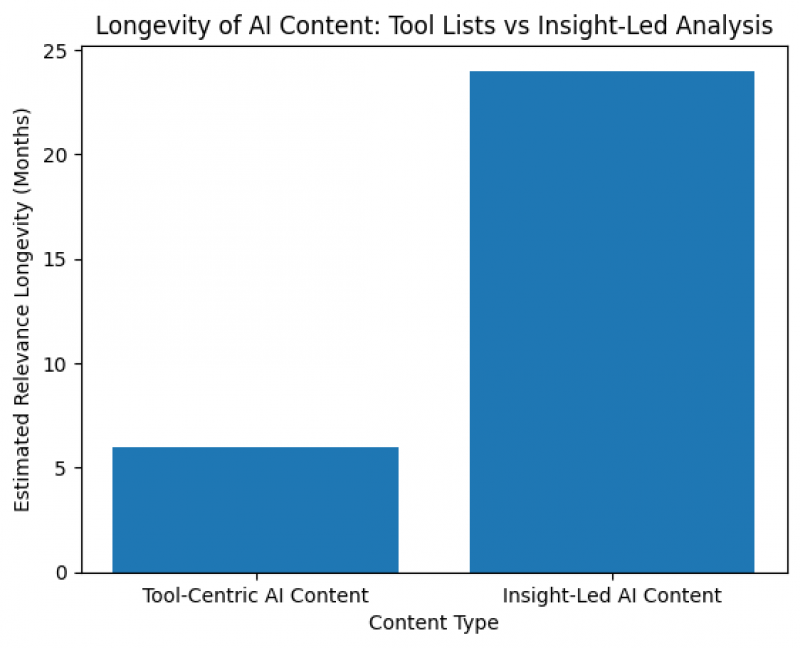

Insight-Led Content vs. Tool-Centric AI Blogs

Tool-focused AI content has a short shelf life. Lists of platforms, features, or pricing structures become outdated quickly. Even accurate comparisons lose relevance as tools evolve.

Insight-led content lasts longer because it deals with human behavior, organizational structure, and decision-making: things that change slowly.

AI Insights DualMedia leans toward this longer-lasting perspective. It doesn’t try to help readers choose tools; it helps them think more clearly about why tool choices fail or succeed.

Boundaries of the AI Insights DualMedia Approach

There are clear limits to this kind of content, and it’s better to acknowledge them upfront rather than gloss over them.

AI Insights–style writing doesn’t walk you through implementation. You won’t find step-by-step playbooks, deployment checklists, or technical diagrams showing how to integrate a model into an existing system. If your immediate problem is “How do I ship this?” or “How do I configure that?”, this content won’t get you there.

It also doesn’t solve operational bottlenecks. It won’t fix data quality issues, staffing gaps, legacy systems, or unclear ownership. Those problems live inside organizations, not articles. At best, this kind of content can help you recognize them earlier.

Because of that, the experience can feel incomplete for readers who are searching for concrete answers. Insight without execution can be frustrating, especially when pressure exists to “do something with AI” quickly.

But that limitation is also the point.

By refusing to pretend that complex AI challenges can be resolved through surface-level guidance, the content avoids a common trap in AI writing: overselling simplicity. Many AI failures don’t come from lack of tools or instructions, but from misaligned expectations, unclear goals, and underestimated human factors. Articles that promise easy solutions often contribute to those failures.

Staying within the lane of interpretation rather than execution keeps the discussion honest. It focuses on helping readers think more clearly before action is taken, instead of encouraging premature action with false confidence.

In practice, this makes the content more useful before decisions are made than after they’re locked in. It’s not a replacement for technical work or operational planning; it’s a filter that can prevent unnecessary or poorly timed moves.

That boundary may feel limiting, but it also keeps the conversation realistic.

Using AI Insights as a Learning Companion

For many readers, this kind of writing works best as a precursor rather than a solution. It helps clarify thinking before decisions are made or tools are selected.

Reading insight-driven AI content first often leads to better questions later:

- What problem are we actually solving?

- Where will friction appear?

- What assumptions are we making?

Those questions don’t stop AI adoption; they make it more deliberate.

Organic Mentions Across AI Communities and Forums

Mentions of “AI Insights DualMedia” appear across different blogs, forums, and explanatory articles, often without strong framing or endorsement. It’s usually referenced as background material rather than a destination.

That kind of mention pattern suggests utility rather than influence. People cite it when it helps explain a point, not because it’s being pushed.

In AI discourse, that’s a subtle but meaningful distinction.

Insight-Based AI Content in a Maturing AI Market

As AI becomes more embedded in everyday operations, the conversation naturally shifts. Early-stage excitement gives way to questions about reliability, governance, and long-term impact.

Insight-driven content tends to become more relevant during this phase, because it addresses complexity rather than novelty. It helps readers navigate ambiguity rather than escape it.

That’s where much of the AI conversation sits now.

Making Sense of AI Without Chasing Every Trend

Not every AI development deserves action. Not every update changes outcomes. And not every organization benefits from adopting the same tools at the same time.

Content that encourages restraint can feel counterintuitive in a fast-moving space, but it often reflects experience rather than optimism.

AI Insights DualMedia doesn’t try to tell readers what to do. It helps them slow down, notice patterns, and think more clearly. Sometimes, that’s the most realistic contribution any AI content can make.
