In 2025, the question is no longer whether AI can help developers but which AI helps them best. LLMs have become the behind-the-scenes brains of everything from autocomplete to test case generation. But with so many options, each claiming to be the smartest, fastest, or most accurate, it’s hard to know where to begin.

Before diving into code with the latest model, let’s pause and make sense of the growing LLM landscape developers are facing. The choice matters: according to Statista, AI is already used at nearly every stage of the development lifecycle, from planning and debugging to testing and documentation.

A Growing List of Options, But No Clear Front-Runner

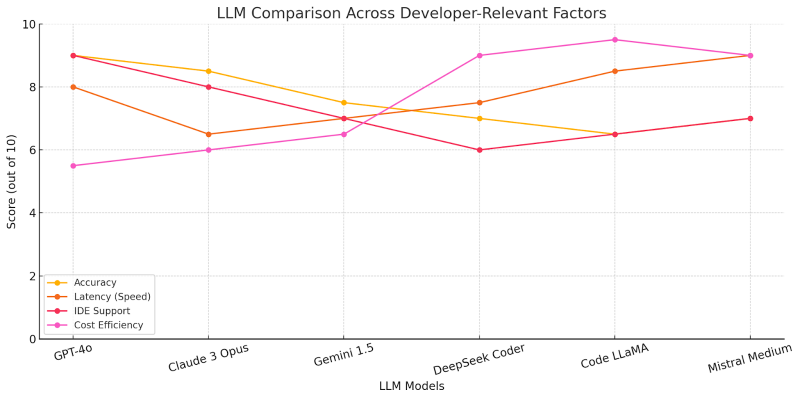

You’ve likely heard of Claude, GPT-4o, Gemini, DeepSeek, and Code Llama, each touted for its own set of strengths. Some models output impressively readable code. Others shine in low-latency performance or niche language support. But “popular” doesn’t always mean “useful,” and the real question is rarely about power. It’s about fit.

And as John Werner notes in Forbes, the rise of AI in coding isn’t just about automation—it’s about redefining how developers think, collaborate, and create.

So, how do you choose? Comparing them side by side is only the first step.

Let’s explore what criteria developers actually care about—and why most blog rankings fall short.

Why Speed, Accuracy, and Cost Alone Don’t Tell the Full Story

Sure, GPT-4o might write a flawless backend API, and Claude may generate beautifully structured Python functions—but do they integrate well with your IDE? Can they follow your team’s architecture? Are they affordable to use at scale?

For developers working under real-world constraints, choosing a model is a balance between performance and practicality. And that’s where most listicles, benchmarks, and vendor docs fail—they focus on specs, not the context in which those specs matter.

Even HubSpot’s overview of AI code writing tools acknowledges that choosing the “right” AI isn’t about the flashiest features; it’s about how naturally the tool fits into your development process.

So, instead of browsing isolated model reviews, imagine if there were a resource built around how you work.

Developers Are Looking for More Than Leaderboards

The truth is, developers aren’t asking “which model is objectively best?”—they’re asking things like:

- Which model generates clean code in my stack?

- Which one doesn’t hallucinate functions that don’t exist?

- Which works reliably inside my IDE without weird workarounds?

Reddit threads and GitHub issues are full of these questions, and OpenRouter’s rankings show which models developers actually use most. But finding a consistent, well-rounded answer in one place? That’s rare.

That’s why many devs are now turning to WebJuice’s best coding LLM guide, a developer-focused resource that connects the dots between community feedback, hands-on use, and actual workflows.
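Whichever guide you consult, one of the questions above (does a model invent functions that don’t exist?) is easy to spot-check on your own code. The sketch below is a rough heuristic, not a feature of any tool mentioned here: `find_missing_attributes` is a hypothetical helper that assumes the model’s output is Python and that the modules it imports are installed locally. It only follows plain `import x` / `import x as y` statements and direct `x.attr` access, and because it imports those modules, run it only where importing them is safe.

```python
import ast
import importlib


def find_missing_attributes(source: str) -> list[str]:
    """Return "module.attr" names the code uses that the module does not define.

    Hypothetical helper for illustration. Only plain `import x` / `import x as y`
    statements and direct `alias.attr` access are checked; `from x import y`,
    deeper attribute chains, and shadowed names are ignored.
    """
    tree = ast.parse(source)

    # Map each local name to the module it is bound to, e.g. {"m": "math"}.
    # `import os.path` binds the name "os" to the top-level package "os".
    aliases: dict[str, str] = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.asname:
                    aliases[alias.asname] = alias.name
                else:
                    top_level = alias.name.split(".")[0]
                    aliases[top_level] = top_level

    missing: set[str] = set()
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id in aliases
        ):
            module_name = aliases[node.value.id]
            try:
                # Importing runs the module's top-level code.
                module = importlib.import_module(module_name)
            except ImportError:
                # The module itself may be hallucinated (or just not installed).
                missing.add(module_name)
                continue
            if not hasattr(module, node.attr):
                missing.add(f"{module_name}.{node.attr}")
    return sorted(missing)


generated = """
import math
print(math.sqrt(2))
print(math.squareroot(2))
"""
print(find_missing_attributes(generated))  # ['math.squareroot']
```

A static check like this misses plenty (methods on objects, keyword arguments, wrong signatures), so treat it as a quick filter before reading the code, not as proof the code is correct.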

Comparing Top Models Still Misses the Point

Claude might be perfect for documenting code. GPT-4o could be better at debugging complex logic. Gemini might shine in frontend-heavy projects. But none of these win in every category—and trying to rank them linearly oversimplifies the complexity of real development.

That’s why the most useful approach is one that evaluates where each model fits best. The WebJuice guide doesn’t crown a single winner—it shows you how different models excel depending on your task, tooling, and team size.

And some tools, like CICI AI with its focus on empathetic, human-like interaction, demonstrate that success can look wildly different depending on your use case. It’s not just about writing syntax; it’s about how these tools think with you.

Speaking of tooling—let’s talk about where LLMs meet your dev environment.

Workflow Compatibility Matters as Much as Intelligence

You don’t code in a vacuum. If an LLM can’t integrate into your daily workflow—be it VS Code, Jupyter, or CI pipelines—it won’t save you time, no matter how smart it is. Some models require API keys and setup gymnastics. Others work right out of the box with plugins and browser extensions.

That’s another area where the WebJuice guide proves helpful—it flags not just what models can do, but how easily they plug into the tools you already use.

And once that LLM is up and running, it’s the real tasks that reveal its strengths.

Use Cases Tell the True Story

LLMs shine (or stumble) when put to practical use. Think:

- Writing unit tests that actually compile

- Refactoring without breaking dependencies

- Explaining legacy code without hallucination

- Annotating functions in readable, useful English

These aren’t bonus features; they’re where developers spend much of their day-to-day time. Choosing a model that nails these workflows matters far more than choosing one that scores high on abstract reasoning tests.

So instead of sorting models by architecture or training size, you need a comparison that shows you what works where it counts.

Programming Language Support Can Be a Dealbreaker

Many devs assume all LLMs handle Python perfectly. But what about JavaScript? Rust? Go? While some models stick to mainstream languages, others are surprisingly adept in niche ecosystems.

Information on which LLM supports your language stack isn’t always easy to find, but it’s one of the first things the WebJuice guide lays out clearly. It skips the buzzwords and simply tells you: “Use this for Python, that for TypeScript, avoid this one for C++.”

Because again, context beats hype.

Final Thoughts: The Best Isn’t a Model—It’s the Match

In the end, there’s no universal “winner.” Your ideal coding LLM depends on your tech stack, your workflow, and your priorities. Whether you’re building with a team, freelancing, or just experimenting, the answer isn’t found in a top-5 list—it’s found in an evaluation that respects the complexity of how developers actually build.
