Viggle AI’s physics-aware 3D video foundation model (JST-1) is unlocking new motion possibilities for creators—here’s how it works, why it matters, and what to watch.

Introduction

Imagine uploading a still photo of your character, typing “dance break → pop-lock-spin”, and getting a video of that character busting moves with natural fluidity. That’s what Viggle AI offers.

Powered by its in-house model JST-1, the platform adds physics-aware motion to static avatars and photos, or to existing videos. For creators chasing short-form virality, that removes most of the manual animation work.

Feature Deep Dive

What JST-1 Brings to the Table

- Physics understanding: motions respect balance, gravity, and momentum.

- 3D video foundation model: beyond 2D filters, it enables realistic movement.

- Neural-graphics-engine feel: you supply a prompt plus an image or audio, and you get animated output.

Taken together, these capabilities give Viggle a first-mover edge among AI video tools.

How Users Actually Use It

- Move: a static image becomes a dancing figure while the background stays in place.

- Rap: characters lip-sync to custom or AI-generated lyrics.

- Mic (voice-to-motion and voice clone): the character sings or talks based on the audio you provide.

- Animate (upcoming): full image-to-video transformation.

For creators on platforms like TikTok/Instagram, this means fewer hours editing and more time iterating on trends.

Community & Growth Metrics

- Registered users: more than 40 million across the platform's ecosystem.

- Vibrant Discord community: challenges, motion-template sharing, and creator engagement.

- Monthly active creators: rapidly scaled from ~1 million in late 2023 to tens of millions by mid-2025.

- Engagement data: ~68% of active users create content at least twice weekly; ~35% monetize their output.

These stats suggest Viggle isn't just a novelty; it's tapping into creator-economy dynamics.

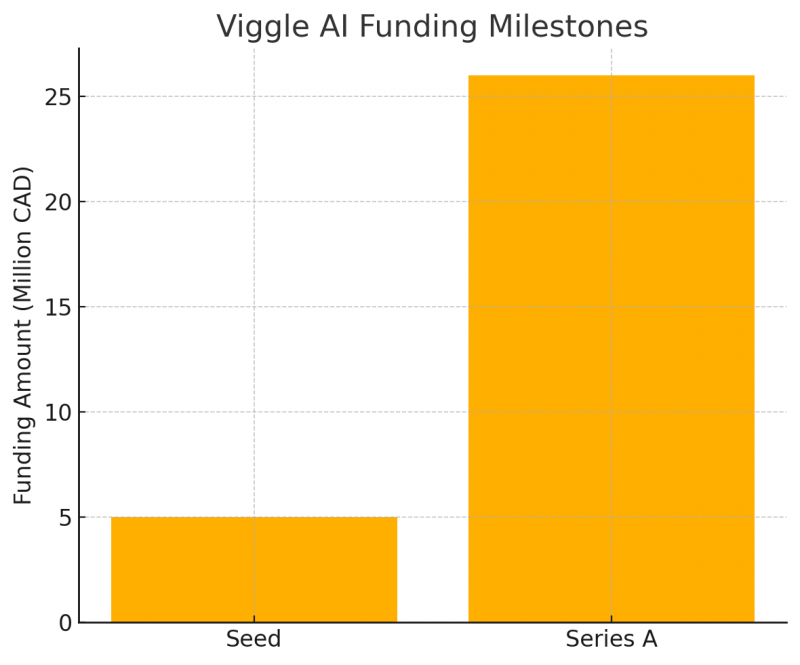

Funding & Market Positioning

In August 2024, Viggle closed a CAD $26 million Series A to scale its infrastructure and expand applications beyond memes into music, VFX, advertising, and virtual entertainment. With backing from top-tier investors and a niche that blends motion control with creator autonomy, Viggle is positioning itself as a leader in the GenAI video-creation segment.

Why It Matters to Creators & Brands

For creators

- Shorter production time: instead of keyframing, rigging, and compositing, you upload, prompt, and render.

- Trend-responsive output: dance challenge today, branded skit tomorrow.

- Lower technical barrier: mobile/web access means you don’t need a full studio.

For brands & studios

- The technology can be repurposed for quick-turn social content, VFX previsualization, and avatar-based marketing.

- Motion templates scale across campaigns: the same character can be reused with a new action, audio track, or voice.

Limitations & Considerations

- Some advanced templates/features reside behind premium tiers; the free tier limits motion variety or export options.

- While JST-1 adds realism, complex scenes (e.g., many interacting characters, detailed backgrounds) may still require manual polish.

- Ethical use: with lip-sync and voice-clone features, responsible policy and content moderation become essential.

- Export resolution and commercial rights may vary by subscription.

Future Roadmap & Strategic Opportunities

- Viggle Animate: broader image-to-video transformation opens doors beyond short-form viral content, such as short films and interactive avatars.

- API/Developer access: once creators and developers can plug Viggle motion generation into other apps and platforms, ecosystem effects should amplify.

- Voice clone + full performance capture: if rollout succeeds, creators could animate avatars with full dialogue and singing, uploaded straight to social.

- Enterprise licensing: brands may adopt JST-1-powered functionalities for internal content creation.

Context: How Viggle AI Compares

Several tools exist for AI-generated or AI-assisted video (e.g., Runway, Pika Labs, Kaiber), but the key differentiator for Viggle is the combination of motion controllability, physics awareness, and a creator-friendly interface.

It's less about full cinematic generation and more about empowering creators with motion control in a lightweight workflow.

| Feature / Tool | Viggle AI | Runway | Pika Labs | Kaiber |
| --- | --- | --- | --- | --- |
| Physics-Aware Motion | ✅ Yes | ❌ No | ❌ No | ❌ No |
| Real-Time Motion Control | ✅ Yes | ❌ No | ✅ Partial | ❌ No |
| Creator Community Integration | ✅ Strong (Discord, 40M+ users) | ❌ Limited | ❌ Limited | ❌ Limited |
| Text / Image to Animation | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Cinematic / Film Focus | ❌ Not primary | ✅ Yes | ✅ Yes | ✅ Yes |
| Accessibility (Web + Mobile) | ✅ Yes | ✅ Yes | ✅ Yes | ✅ Yes |
| Ideal For | Content Creators, Meme Artists, Social Media | Filmmakers, Editors | Short-form Creators | Music Visuals |

Quick Take: Pros & Cons

| ✅ Strengths | ⚠️ Weaknesses |
| --- | --- |
| Realistic motion via physics-aware model | Advanced features locked behind paywall |
| Mobile/web accessible, creator-friendly | Complex scenes may still need manual work |
| Strong creator community & momentum | Export resolution / commercial rights may vary |
| Rapid trend-focused output capability | Ethical moderation responsibilities grow |

Final Thoughts

What this really means: Viggle AI isn’t just another filter or gimmick. It’s a shift toward motion as a creative starter, not an afterthought. For creators, that means more experiments, faster turnaround, and a higher chance at virality. For brands, it means making dynamic visuals without ramping up full production.

If the roadmap executes as planned, we could see a standard workflow where you upload a photo, pick motion, add voice, hit render, and you’ve got content perfect for the feed. That kind of agility is powerful in today’s creator economy.
