Table of Contents

- Who Created DeepSeek AI? Origins, Vision, and the Team Behind It

- What Makes DeepSeek AI Different from ChatGPT or Gemini?

- Inside DeepSeek Chat: A Walkthrough of the Web Experience

- DeepSeek AI on Mobile: Is the App as Powerful as the Web Version?

- Open Models, Open Ambitions: DeepSeek R1 for Developers

- DeepSeek vs. ChatGPT: Which One Handles Your Prompts Better?

- DeepSeek AI Reviews: From Reddit Threads to Trustpilot Ratings

- Under the Hood: DeepSeek R1 Performance and Benchmarks Explained

- How DeepSeek AI Understands You: Language Processing Demystified

- Is DeepSeek AI Just Hype? What the Media’s Saying Globally

- What’s Next for DeepSeek AI? Clues from GitHub and X (Twitter)

- Who Should Switch to DeepSeek—and Who Shouldn’t

- Power Tips to Maximize DeepSeek AI for Writing, Coding, and More

- Does DeepSeek AI Support Plugins, Memory, or Web Browsing Yet?

- Can You Trust DeepSeek AI with Sensitive Data? Privacy & Safety Check

- How to Fine-Tune or Train DeepSeek AI for Your Workflow

- DeepSeek AI for Students: Better Notes, Research, or Just Distraction?

- What DeepSeek AI Still Gets Wrong: Limitations to Be Aware Of

- Comparing the UX: DeepSeek Chat vs ChatGPT vs Claude vs Gemini

Back in early 2024, while everyone was still caught up in the ChatGPT vs. Gemini showdown, a lesser-known name began surfacing in Reddit threads, GitHub drops, and side-by-side YouTube demos: DeepSeek AI. No flashy press release, no billion-dollar headline—but slowly, steadily, it started to spark curiosity.

By January 2025, the question wasn’t “Have you heard of DeepSeek?” It was “Wait, is DeepSeek better than GPT-4?”

So… who built it, what can it do, and is it really worth switching over from your favorite chatbot?

Let’s break it down.

Who Created DeepSeek AI? Origins, Vision, and the Team Behind It

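DeepSeek AI is developed by a Chinese research and engineering team focused on building open-source large language models that can rival U.S. giants. The Hangzhou-based company was founded in 2023 by Liang Wenfeng, co-founder of the quantitative hedge fund High-Flyer, which also backs it. Still, there’s no Elon or Sam Altman-style figurehead here: Liang keeps a low profile, and the team lets its work speak.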

The company maintains a strong open-development presence:

- GitHub: deepseek-ai

- LinkedIn: DeepSeek AI Company Page

- HuggingFace: DeepSeek R1 model

This approach has helped them build trust in the developer community, especially among those looking for open alternatives to proprietary models.

What Makes DeepSeek AI Different from ChatGPT or Gemini?

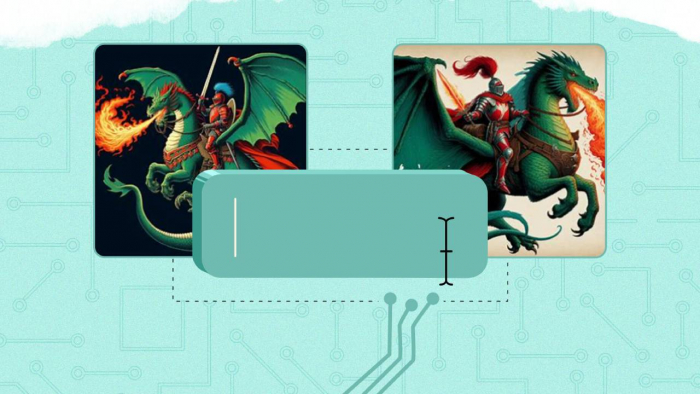

To most users, DeepSeek AI looks like another GPT-style chatbot. But spend 15 minutes with it, and the differences start surfacing.


According to India Today, the LLM powering DeepSeek performs especially well in math, multilingual reasoning, and academic-style prompts.

What sets it apart:

- Open architecture: You can explore and even test the DeepSeek R1 model.

- Performance stability: No lag spikes or timeout errors, even during peak hours.

- Privacy-respecting UI: Clean interface, no ads, no tracking (unlike some popular freemium apps).

It doesn’t have web browsing or a plugin ecosystem like ChatGPT Plus, but what it does, it does quickly and reliably.

Inside DeepSeek Chat: A Walkthrough of the Web Experience

Log on to chat.deepseek.com and you're greeted by a minimal UI: just a prompt box and a response panel. You can sign up instantly, with no credit card or waitlist required.

It supports:

- Style settings: casual, formal, creative, technical

- Clear chat memory and session export

- Lightning-fast response times, especially for factual or code-heavy prompts

One Redditor called it “ChatGPT-3.5’s brain in a Claude body with Gemini’s speed.” That’s a wild combo—and not entirely inaccurate.

DeepSeek AI on Mobile: Is the App as Powerful as the Web Version?

Mobile versions of DeepSeek are available for both iOS and Android.

Both versions mirror the web UI closely.


What’s notable:

- App size is small (~50MB on Android)

- Smooth background task handling

- Battery consumption is minimal

- Doesn’t log you out after inactivity (a big plus for regular users)

And unlike ChatGPT’s mobile version, DeepSeek keeps all core features free with no Plus-tier upsell… for now.

Open Models, Open Ambitions: DeepSeek R1 for Developers

For devs and researchers, DeepSeek’s true power lies in its open release of DeepSeek R1. It’s:

- A decoder-only model

- Built on DeepSeek-V3-Base, which was pretrained on 14.8T tokens

- Tuned for multilingual, STEM, and code reasoning

GitHub also hosts inference scripts, fine-tuning recipes, and tokenizer setups, making it a favorite among independent builders and AI tinkerers.
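Developers sizing up a local deployment often start with a back-of-the-envelope memory estimate: parameter count times bytes per parameter. The sketch below assumes the publicly listed 671B total parameters for DeepSeek-R1 and uses the nominal size of one distilled variant (DeepSeek-R1-Distill-Qwen-7B) for comparison. It ignores activations, KV cache, and runtime overhead, so treat the results as lower bounds, not hardware requirements.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumptions: parameter counts are nominal published totals; activations,
# KV cache, and framework overhead are NOT included, so results are lower bounds.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "fp8": 1.0,
    "int4": 0.5,  # typical 4-bit quantization, ignoring scale/zero-point storage
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the weight storage size in gigabytes (1 GB = 1e9 bytes)."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    models = {
        "DeepSeek-R1 (671B total)": 671e9,
        "DeepSeek-R1-Distill-Qwen-7B (nominal 7B)": 7e9,
    }
    for name, params in models.items():
        for dtype in ("bf16", "fp8", "int4"):
            print(f"{name:42s} {dtype:5s} ~{weight_memory_gb(params, dtype):,.1f} GB")
```

For the full model this works out to roughly 671 GB at FP8 before any overhead, which helps explain why the smaller distilled checkpoints are a common starting point for local experiments.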

DeepSeek vs. ChatGPT: Which One Handles Your Prompts Better?

On the surface, DeepSeek feels like a hybrid of GPT-3.5 and Claude 2. It responds quickly, handles factual queries well, and hallucinates less often on technical inputs.

Based on user comparisons:

- ChatGPT is better at roleplay, creativity, and general “vibes.”

- DeepSeek is better at math, translations, and research-heavy prompts.

- Claude 3 still leads for structured logic and nuanced writing.

Reddit discussions offer mixed opinions, with some users calling it “mid” and others calling it “scary good.” Quora comparisons echo the same split.

DeepSeek AI Reviews: From Reddit Threads to Trustpilot Ratings

Let’s talk feedback.

On Trustpilot, DeepSeek holds a mixed rating. Users love the speed and stability, but some report issues with long-term session memory and minor translation quirks.

SlideSpeak’s review noted that “DeepSeek often produces more precise answers on technical queries than ChatGPT 3.5.”

Reddit threads vary—from praise for its minimal UI to skepticism over its claim to GPT-4-level capabilities.

It’s still early days, but the general verdict? Fast, reliable, and promising—with some room to grow.

Under the Hood: DeepSeek R1 Performance and Benchmarks Explained

According to HuggingFace, the R1 model was evaluated on major benchmarks such as:

- MMLU (Massive Multitask Language Understanding)

- HumanEval (code generation)

- GSM8K (grade school math)

Early comparisons put it on par with GPT-3.5 and Claude Instant, but DeepSeek’s own technical report claims R1 performs comparably to OpenAI’s o1 on reasoning tasks such as math and coding, with noticeable gains in Chinese-English bilingual reasoning.
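For readers wondering how scores on two of these benchmarks are usually computed, here is a minimal sketch. `pass_at_k` implements the unbiased pass@k estimator introduced with HumanEval: given n generated samples of which c pass the unit tests, it estimates the chance that at least one of k samples is correct. The GSM8K helper assumes the dataset's convention that reference solutions end in `#### <answer>` and uses a common, but not universal, rule of comparing against the last number in the model's output. Neither function is taken from DeepSeek's own evaluation code.

```python
import math
import re
from typing import Optional


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c passed, budget k."""
    if n - c < k:
        # Every size-k subset must contain at least one passing sample.
        return 1.0
    # 1 - P(all k drawn samples are failures)
    return 1.0 - math.comb(n - c, k) / math.comb(n, k)


def last_number(text: str) -> Optional[str]:
    """Return the last number in the text, with thousands separators removed."""
    matches = re.findall(r"-?\d[\d,]*(?:\.\d+)?", text)
    return matches[-1].replace(",", "") if matches else None


def gsm8k_exact_match(model_output: str, reference: str) -> bool:
    """Compare the model's final number with the answer after '####'."""
    gold = reference.split("####")[-1].strip().replace(",", "")
    return last_number(model_output) == gold
```

Note that pass@1 reduces to c/n, the plain fraction of passing samples; larger k rewards models that get the answer right in at least one of several tries.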

How DeepSeek AI Understands You: Language Processing Demystified

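Under the hood, DeepSeek R1 is a transformer-based, decoder-only model built on DeepSeek-V3, which uses a mixture-of-experts (MoE) architecture: of its 671B total parameters, only about 37B are activated for each token. GPT-4’s architecture, by contrast, has never been officially disclosed.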

In real terms?

- It’s great at holding short-term context (up to ~8K tokens)

- It prioritizes token probability using domain-specific weights

- Responses are shorter but sharper when prompted correctly
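To make the mixture-of-experts idea mentioned above concrete, here is a toy sketch of top-k routing: a gate scores every expert, only the k highest-scoring experts actually run, and their outputs are blended with softmax weights renormalized over the chosen few. Everything here (scalar inputs, one-line "experts", hand-picked gate scores) is a made-up illustration of the general technique, not any production model's implementation, which would use vectors, a learned router, and load balancing on top.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    chosen = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: float, gate_scores: Sequence[float],
                experts: Sequence[Callable[[float], float]], k: int = 2) -> float:
    """Run only the routed experts and return their weighted sum."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_scores, k))


if __name__ == "__main__":
    # Four toy "experts"; with k=2, two of them are skipped for this input.
    experts = [lambda v: v, lambda v: 2 * v, lambda v: 10 * v, lambda v: -v]
    scores = [0.1, 2.0, -1.0, 1.5]
    print(top_k_route(scores, k=2))
    print(moe_forward(3.0, scores, experts, k=2))
```

The compute saving comes from the skipped experts: they hold parameters but do no work for this input, which is how an MoE model can be large in total yet activate only a fraction of its weights per token.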

Is DeepSeek AI Just Hype? What the Media’s Saying Globally

From BBC to NYT, coverage has ranged from “a rising alternative in a ChatGPT world” to “possibly China’s strongest play in the AI race.”

TechTarget’s explainer digs into DeepSeek’s architectural transparency and its implications for global AI competition.

What’s Next for DeepSeek AI? Clues from GitHub and X (Twitter)

Judging from GitHub issues and posts by @deepseek_ai on X, the team is planning:

- Larger context window (up to 32K tokens)

- Vision and image input support

- APIs for developers with rate-limited free tiers

Watch their GitHub closely; the pace of development is picking up.

Who Should Switch to DeepSeek—and Who Shouldn’t

Great for you if:

- You’re a developer or researcher needing fine-tuned outputs

- You care more about accuracy than personality

- You want a free, fast, no-fuss GPT alternative

Maybe skip for now if:

- You want plugins, browsing, or app integrations

- You need memory persistence and conversational depth

- You use AI for storytelling, branding, or creative projects

Power Tips to Maximize DeepSeek AI for Writing, Coding, and More

- Use “precise” or “technical” style mode for structured tasks

- Break long prompts down into bullet points, since DeepSeek responds better to them

- For coding help, add “explain line-by-line” in your prompt

- Use it as a dual-checker alongside ChatGPT for translations and facts
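The bullet-point and line-by-line tips above are easy to automate if you send many similar requests. This hypothetical `build_prompt` helper is plain string assembly with no DeepSeek-specific API: it puts the task on one line, the requirements as bullets, and optionally appends the line-by-line request.

```python
from typing import Iterable


def build_prompt(task: str, requirements: Iterable[str], code: str = "",
                 explain_line_by_line: bool = False) -> str:
    """Assemble a structured prompt: task, bulleted requirements, optional code."""
    lines = [task.strip(), "", "Requirements:"]
    # One bullet per non-empty requirement, trimmed of stray whitespace.
    lines += [f"- {req.strip()}" for req in requirements if req.strip()]
    if code:
        lines += ["", "Code:", code.rstrip()]
    if explain_line_by_line:
        lines += ["", "Explain line-by-line."]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt(
        "Refactor this function for readability.",
        ["Keep the public signature", "Add type hints", "No new dependencies"],
        code="def f(x):\n    return [i*i for i in x if i%2==0]",
        explain_line_by_line=True,
    ))
```

Paste the printed text into the prompt box as-is; the structure is the point, so adapt the section labels to whatever reads naturally for your task.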

Does DeepSeek AI Support Plugins, Memory, or Web Browsing Yet?

Short answer: No.

As of mid-2025, DeepSeek is focused on fast core chat with no external plugin system, memory threads, or browsing capability. But this also means fewer bugs and better reliability.

Can You Trust DeepSeek AI with Sensitive Data? Privacy & Safety Check

DeepSeek doesn’t collect user data for ads, and no trackers run in the web version. However:

- There’s no statement on HIPAA/GDPR compliance

- Sessions are stored temporarily but not user-identifiable

- Great for general use, but not for private client info or medical chats
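If you still want to paste real-world text, one low-effort habit is stripping obvious identifiers first. The regex-based `redact` function below is a hypothetical helper, not part of DeepSeek. It only catches simple email addresses and phone-number-like digit runs (and may also catch things like dates), so it is a first filter and does not make any chat service suitable for private client or medical data.

```python
import re

# Deliberately simple patterns: they miss many formats and are
# NOT a substitute for a dedicated PII/PHI scrubbing tool.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a [LABEL] placeholder, emails first."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +1 (555) 123-4567 today."))
```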

How to Fine-Tune or Train DeepSeek AI for Your Workflow


Using the GitHub codebase and HuggingFace weights, you can:

- Run local inference

- Fine-tune on your dataset

- Deploy via Docker for private LLM use

This makes it a strong candidate for enterprise and academic custom projects.
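Fine-tuning scripts generally expect training examples in a file rather than a spreadsheet or chat log. The sketch below converts (prompt, response) pairs into chat-style JSONL with a `messages` list of role/content objects. That layout is an assumption: it is a widespread convention (Hugging Face TRL's `SFTTrainer`, for instance, accepts conversational datasets in this shape), but check what the specific recipe you use expects before training. `to_chat_jsonl` is a hypothetical helper name.

```python
import json
from typing import Iterable, Tuple


def to_chat_jsonl(pairs: Iterable[Tuple[str, str]], system_prompt: str = "") -> str:
    """Serialize (prompt, response) pairs as chat-style JSONL, one record per line."""
    out = []
    for prompt, response in pairs:
        messages = []
        if system_prompt:
            messages.append({"role": "system", "content": system_prompt})
        messages.append({"role": "user", "content": prompt})
        messages.append({"role": "assistant", "content": response})
        # ensure_ascii=False keeps non-English text readable in the file
        out.append(json.dumps({"messages": messages}, ensure_ascii=False) + "\n")
    return "".join(out)


if __name__ == "__main__":
    examples = [
        ("Summarize our refund policy in one sentence.",
         "Customers can request a full refund within 30 days of purchase."),
        ("Translate 'invoice' into Spanish.", "factura"),
    ]
    print(to_chat_jsonl(examples, system_prompt="You are our support assistant."), end="")
```

In practice you would write the returned string to a file such as `train.jsonl` (opened with `encoding="utf-8"`) and point your fine-tuning script at it.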

DeepSeek AI for Students: Better Notes, Research, or Just Distraction?

Student communities on Reddit have already found it useful for:

- Summarizing lectures

- Translating academic papers

- Solving math problems step-by-step

But since it lacks a citation engine or persistent memory, it’s not great for long research papers or bibliographies.

What DeepSeek AI Still Gets Wrong: Limitations to Be Aware Of

Weaknesses noted by users:

- Repeats itself in long chats

- Struggles with highly emotional or poetic tasks

- Can hallucinate citations or stats if not prompted tightly

- No support yet for document upload, image input, or voice input

Comparing the UX: DeepSeek Chat vs ChatGPT vs Claude vs Gemini

| Feature | DeepSeek AI | ChatGPT | Claude 3 | Gemini Advanced |
| --- | --- | --- | --- | --- |
| Speed | Fast | Moderate | Fast | Fast |
| Plugins | Not yet | Yes | No | Yes |
| Memory | No | Plus only | Yes | Yes |
| Multilingual | Strong | Moderate | Strong | Strong |
| Free Access | Yes | Yes (GPT-3.5) | Yes | Yes |

Final Thoughts

After spending significant time with DeepSeek AI—testing it for writing, coding, research, and even language translation—it’s clear that this isn’t just another chatbot clone. DeepSeek doesn’t try to be everything. Instead, it focuses on doing a few core things exceptionally well: factual accuracy, technical reasoning, and blazing-fast response times.

If you’re someone who:

- Writes academic or research content

- Codes regularly and wants step-by-step explanations

- Translates or switches between languages in your workflow

- Prefers clean interfaces without marketing noise or upsells

If that sounds like you, DeepSeek will feel like a breath of fresh air. It boots fast, works fast, and rarely breaks, even when others lag or time out.

But let’s be honest: it’s not perfect. If you need memory across chats, access to tools like web browsing or plugins, or are doing complex storytelling or creative branding, ChatGPT Plus or Claude might still serve you better. DeepSeek also lacks document upload and persistent session features—so it's not ideal for long-term research or creative planning.

Yet despite those gaps, what makes DeepSeek stand out is its developer-first openness and reliability. You can literally inspect the model, fine-tune it, or self-host it—something almost no mainstream chatbot allows. It’s the kind of tool you’d trust more the deeper you dive.

So here’s the takeaway:

- For devs, researchers, and AI-savvy users, DeepSeek isn’t just an alternative—it’s an upgrade in many ways.

- For casual users or creatives, it’s a strong secondary tool that will likely surprise you with how sharp and fast it is.

- In a world full of LLM hype, DeepSeek stays quiet—and just delivers.
